Generate JSON Node

Overview

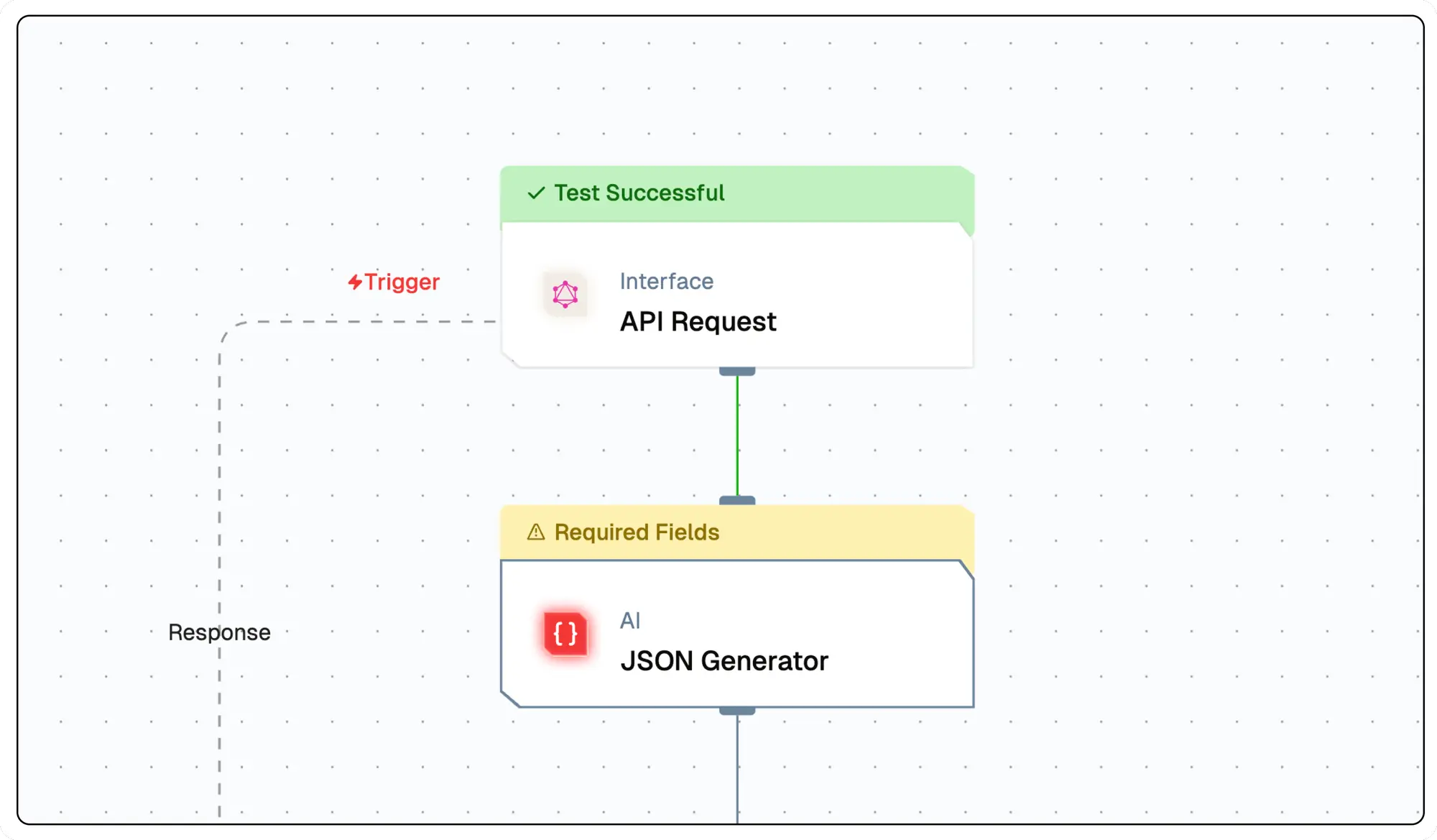

The Generate JSON Node is a specialized AI component that generates structured JSON data based on user input and predefined schemas. This node is particularly useful for applications requiring consistent data structures, API integrations, and automated data formatting.

Node Type Information

| Type | Description | Applies to This Node |
|---|---|---|
| Batch Trigger | Starts the flow on a schedule or batch event. Ideal for periodic data processing. | ❌ False |
| Event Trigger | Starts the flow based on external events (e.g., webhook, user interaction). | ❌ False |
| Action | Executes a task or logic as part of the flow (e.g., API call, transformation). | ✅ True |

This node is an Action node that processes input data and generates structured JSON output based on predefined schemas.

Features

Key Functionalities

- Generative Model Selection: Allows users to select credentials for their preferred generative model, ensuring flexibility and compatibility with various LLMs.
- Customizable Prompts: Features a field for creating prompt templates, enabling tailored input for specific use cases.
- System Prompt Definition: Includes an option to define a system-level prompt to guide the AI's behavior, ensuring context-aware and role-specific responses.
- Additional Properties Management: Offers expandable sections for configuring advanced properties to fine-tune AI-generated outputs.

Benefits

- Flexibility: Supports integration with multiple LLMs, allowing users to choose different models based on their specific needs and use cases.
- User-Centric Design: Provides intuitive interfaces for prompt customization and system prompt definition, making it accessible to both beginners and advanced users.
- Enhanced Control: Enables precise control over the AI's behavior and output quality through customizable prompts and system configurations.
- Scalability: Facilitates the creation of reusable prompt templates for diverse flows, ensuring consistency across projects.

What can I build?

- Create AI-driven applications that require structured data output from natural language inputs.

- Develop automated systems for generating specific data formats for use in analytics and reporting.

- Build interactive tools that convert conversational inputs into structured JSON for use in various applications.

- Design content-generation flows that require consistent data formatting across different platforms.

Setup

- Select the Generate JSON Node.
- Fill in the required parameters.
- Build the desired flow.
- Deploy the project.
- Click Setup in the workflow editor to get the automatically generated instructions, and add them to your application.

Configuration Reference

| Parameter | Description | Example Value |
|---|---|---|
| Generative Model Name | Select the model that generates the structured output from the prompt. | Gemini Model |
| Output Schema (Zod JSON) | Define the output structure and validation rules using Zod JSON. | JSON Schema |
| User Prompt | Define the instructions for generating the output. | Tell me how to travel from Bali to Singapore |
| System Prompt | System prompt that guides the LLM's behavior. | You are a travel planner. |
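In the low-code example on this page, the prompt template contains the placeholder `${{triggerNode_1.output.topic}}`. This appears to pull the `topic` field from the trigger node's output into the User Prompt.

The following is a minimal Python sketch of how such placeholders could be resolved against upstream node outputs. It is only an illustration. The `resolve_template` function, the `PLACEHOLDER` pattern and the shape of the `context` dictionary are hypothetical, and this is not Lamatic's implementation.

```python
from __future__ import annotations

import re
from typing import Any

# Hypothetical pattern for ${{nodeId.output.field}} placeholders.
PLACEHOLDER = re.compile(r"\$\{\{\s*([A-Za-z0-9_.]+)\s*\}\}")


def resolve_template(template: str, context: dict[str, Any]) -> str:
    """Replace each ${{a.b.c}} placeholder by walking the dotted path in context."""

    def lookup(match: re.Match[str]) -> str:
        value: Any = context
        for key in match.group(1).split("."):
            if not isinstance(value, dict) or key not in value:
                raise KeyError(f"unresolved placeholder: {match.group(0)}")
            value = value[key]
        return str(value)

    return PLACEHOLDER.sub(lookup, template)


# Hypothetical upstream output: the trigger node produced {"topic": "Singapore"}.
context = {"triggerNode_1": {"output": {"topic": "Singapore"}}}
prompt = resolve_template("tell me something about ${{triggerNode_1.output.topic}}", context)
print(prompt)  # tell me something about Singapore
```

When a placeholder cannot be resolved, the sketch raises an error instead of silently leaving it in the prompt. This keeps a misspelled node ID from reaching the model unnoticed.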

Low-Code Example

```yaml
nodes:
  - nodeId: InstructorLLMNode_774
    nodeType: InstructorLLMNode
    nodeName: JSON Generator
    values:
      schema: |-
        {
          "type": "object",
          "properties": {
            "output": {
              "type": "string"
            }
          }
        }
      promptTemplate: tell me something about ${{triggerNode_1.output.topic}}
      attachments: "[]"
      messages: "[]"
      generativeModelName:
        provider_name: mistral
        type: generator/text
        credential_name: Mistral API
        credentialId: 32bf5e3b-a8fc-4697-b95a-b1af3dcf7498
        model_name: mistral/mistral-large-2402
    needs:
      - triggerNode_1
```

Output Schema

_meta

- A nested object containing metadata about the processing of the generation request.

Token Usage Details

- prompt_tokens: Number of tokens in the input prompt provided to the model.
- completion_tokens: Number of tokens in the generated output.
- total_tokens: Sum of prompt_tokens and completion_tokens.
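Because total_tokens is defined as the sum of prompt_tokens and completion_tokens, it can be checked directly. The counters can also be added up across runs to track usage.

The following is a minimal Python sketch that assumes `_meta` has already been parsed into a dictionary. The helper names `check_token_totals` and `sum_usage` are hypothetical, and so is the second run's data.

```python
from __future__ import annotations

from typing import Any

# Token counters that appear at the top level of _meta.
USAGE_KEYS = ("prompt_tokens", "completion_tokens", "total_tokens")


def check_token_totals(meta: dict[str, Any]) -> bool:
    """Return True when total_tokens equals prompt_tokens + completion_tokens."""
    return meta["total_tokens"] == meta["prompt_tokens"] + meta["completion_tokens"]


def sum_usage(metas: list[dict[str, Any]]) -> dict[str, int]:
    """Add up the top-level token counters across several runs."""
    return {key: sum(m[key] for m in metas) for key in USAGE_KEYS}


# Values taken from the Example Output on this page.
meta = {"prompt_tokens": 56, "completion_tokens": 7, "total_tokens": 63}
# A second, hypothetical run used only to illustrate aggregation.
other = {"prompt_tokens": 40, "completion_tokens": 10, "total_tokens": 50}

print(check_token_totals(meta))  # True
print(sum_usage([meta, other]))  # {'prompt_tokens': 96, 'completion_tokens': 17, 'total_tokens': 113}
```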

prompt_tokens_details

- A nested object providing a breakdown of token usage in the prompt.

- cached_tokens: Number of tokens reused from a cache.
- audio_tokens: Number of tokens associated with audio input (if applicable).

completion_tokens_details

- A nested object detailing token usage in the generated output.

- reasoning_tokens: Number of tokens used for reasoning processes (if applicable).
- audio_tokens: Number of tokens associated with audio output (if applicable).
- accepted_prediction_tokens: Number of tokens from accepted predictions (if applicable).
- rejected_prediction_tokens: Number of tokens from rejected predictions (if applicable).

Model Information

- model_name: The name of the AI model used for generation.
- model_provider: The provider or organization supplying the model.
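The node's result places the fields generated from your schema alongside the `_meta` object; for example, `name` sits next to `_meta` in the Example Output. A downstream step may therefore want to separate the two and confirm that the generated fields match the schema.

The following Python sketch shows one way to do that. It assumes a hypothetical schema with a single required string field `name`, and it supports only a small subset of JSON Schema: top-level `type`, `properties` and `required`. It is not a replacement for the node's own Zod-based validation.

```python
from __future__ import annotations

from typing import Any

# Hypothetical schema matching the Example Output: one required string field.
SCHEMA = {
    "type": "object",
    "properties": {"name": {"type": "string"}},
    "required": ["name"],
}

# JSON Schema type names handled by this sketch, mapped to Python types.
JSON_TYPES = {"object": dict, "string": str, "array": list}


def split_output(result: dict[str, Any]) -> tuple[dict[str, Any], dict[str, Any]]:
    """Separate the generated fields from the _meta object."""
    payload = {k: v for k, v in result.items() if k != "_meta"}
    return payload, result.get("_meta", {})


def validate(payload: dict[str, Any], schema: dict[str, Any]) -> list[str]:
    """Check top-level fields only; an empty list means the payload matches."""
    errors = []
    for field in schema.get("required", []):
        if field not in payload:
            errors.append(f"missing required field: {field}")
    for field, spec in schema.get("properties", {}).items():
        if field in payload and not isinstance(payload[field], JSON_TYPES[spec["type"]]):
            errors.append(f"{field}: expected {spec['type']}")
    return errors


result = {"name": "Adelaide", "_meta": {"total_tokens": 63, "model_name": "gpt-4-turbo"}}
payload, meta = split_output(result)
print(payload)                    # {'name': 'Adelaide'}
print(validate(payload, SCHEMA))  # []
```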

Example Output

```json
{
  "name": "Adelaide",
  "_meta": {
    "prompt_tokens": 56,
    "completion_tokens": 7,
    "total_tokens": 63,
    "prompt_tokens_details": {
      "cached_tokens": 0,
      "audio_tokens": 0
    },
    "completion_tokens_details": {
      "reasoning_tokens": 0,
      "audio_tokens": 0,
      "accepted_prediction_tokens": 0,
      "rejected_prediction_tokens": 0
    },
    "model_name": "gpt-4-turbo",
    "model_provider": "openai"
  }
}
```

Troubleshooting

Common Issues

| Problem | Solution |
|---|---|
| Invalid API Key | Ensure the API key is correct and has not expired. |
| Dynamic Content Not Loaded | Increase the Wait for Page Load time in the configuration. |

Debugging

- Check Lamatic Flow logs for error details.

- Verify API Key.