Crawler Node

Overview

The Crawler Node is a web scraping component that automatically extracts data from websites by crawling web pages and following links. This node enables automated data collection from web sources for analysis and processing.

Node Type Information

| Type | Description | Status |
|---|---|---|
| Batch Trigger | Starts the flow on a schedule or batch event. Ideal for periodic data processing. | ❌ False |
| Event Trigger | Starts the flow based on external events (e.g., webhook, user interaction). | ✅ True |
| Action | Executes a task or logic as part of the flow (e.g., API call, transformation). | ✅ True |

This node is an Action node that crawls websites and extracts data from web pages for automated data collection.

Features

Key Functionalities

- Comprehensive Crawling: It recursively traverses websites, identifying and accessing all subpages to ensure thorough data collection. It begins with a specified URL, analyzes the sitemap (if available), and follows links to uncover all accessible subpages.

- Dynamic Content Handling: It effectively manages dynamic content rendered with JavaScript, ensuring comprehensive data extraction from all accessible subpages.

- Modular Design: Create reusable workflow components.

Benefits

- Reliability: It handles common web scraping challenges, including proxies, rate limits, and anti-scraping measures, ensuring consistent and dependable data extraction.

- Efficiency: It intelligently manages requests to minimize bandwidth usage and avoid detection, optimizing the data extraction process.

Prerequisites

Before using the Crawler Node, ensure you have the following:

- A valid Firecrawl API Key.

- Access to the Firecrawl service host URL.

- Properly configured credentials for Firecrawl.

- A webhook endpoint for receiving notifications (required when the crawler runs in async mode).

Setup

Step 1: Obtain API Credentials

- Register on Firecrawl.

- Generate an API key from your account dashboard.

- Note the Host URL and Webhook Endpoint.

Step 2: Configure Firecrawl Credentials

Use the following format to set up your credentials:

| Key Name | Description | Example Value |
|---|---|---|
| Credential Name | Name to identify this set of credentials | my-firecrawl-creds |
| Firecrawl API Key | Authentication key for accessing Firecrawl services | fc_api_xxxxxxxxxxxxx |
| Host | Base URL where Firecrawl service is hosted | https://api.firecrawl.dev |

Step 3: Set Up the Mode

Configuration Reference

This is the base configuration for the crawler node, regardless of the mode you choose.

| Parameter | Description | Example Value |
|---|---|---|
| Credential Name | Select previously saved credentials | my-firecrawl-creds |
| URL | Starting point URL for the crawler | https://example.com |
| Exclude Path | URL patterns to exclude from the crawl | "admin/*", "private/*" |
| Include Path | URL patterns to include in the crawl | "blog/*", "products/*" |
| Crawl Depth | Maximum depth to crawl relative to the entered URL | 3 |
| Crawl Limit | Maximum number of pages to crawl | 1000 |
| Crawl Sub Pages | Toggle to enable or disable crawling sub pages | true |
| Max Discovery Depth | Maximum depth for discovering new URLs during the crawl | 5 |
| Ignore Sitemap | Ignore the sitemap.xml file for crawling | false |
| Allow Backward Links | Allow crawling backward links (e.g., from a blog post to the homepage) | true |
| Allow External Links | Allow crawling external links (e.g., links to other domains) | false |
| Ignore Query Parameters | Do not re-scrape the same path with different (or no) query parameters | false |
| Delay | Delay between requests to avoid overloading the server | 2 (seconds) |

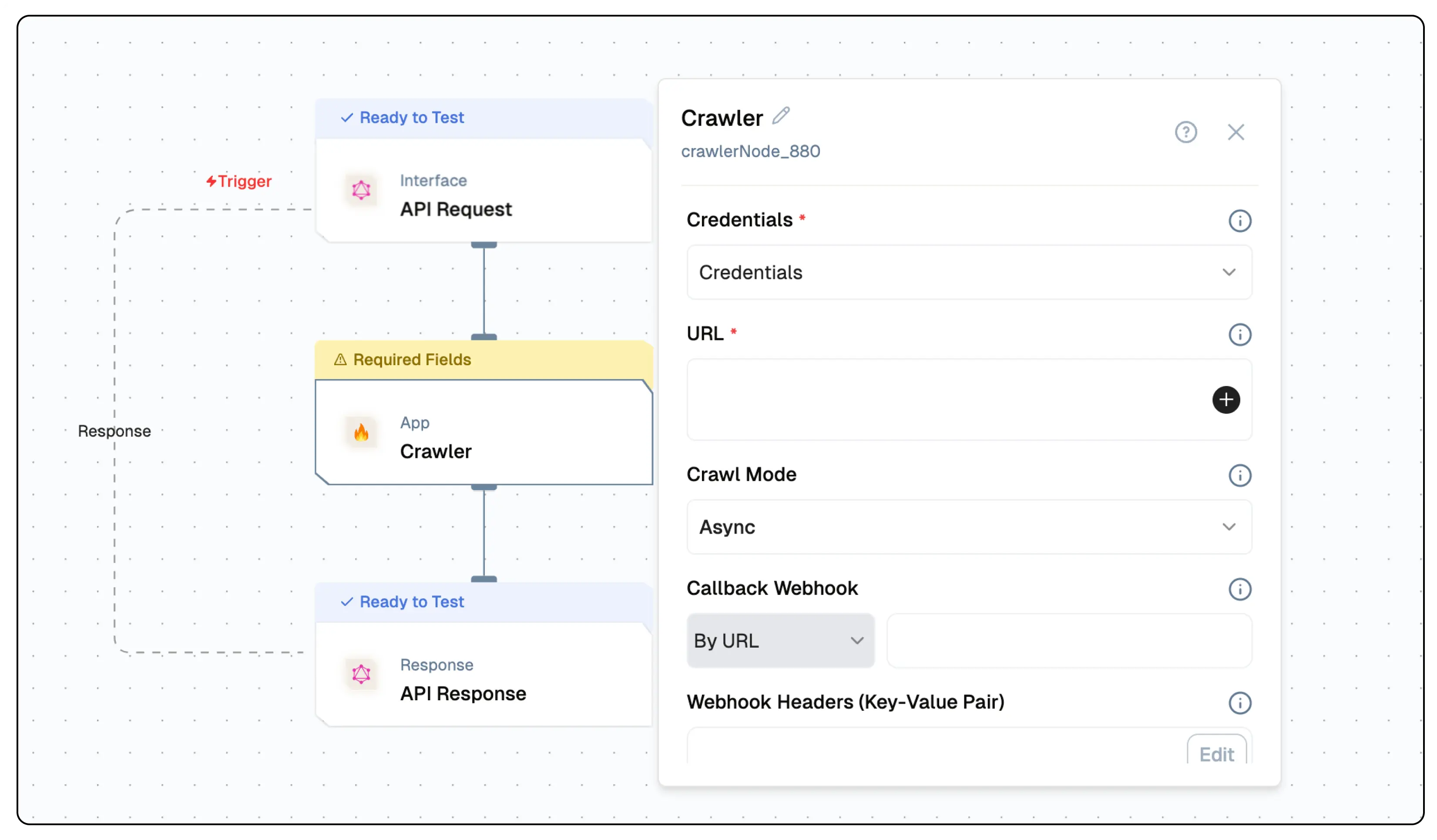
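To make the Include Path and Exclude Path parameters more concrete, here is a minimal sketch of how such patterns could filter candidate URLs. It assumes glob-style matching against the URL path, as the example values suggest; the crawler service may interpret patterns differently, and `is_path_allowed` is a hypothetical helper, not part of the node.

```python
from fnmatch import fnmatch
from urllib.parse import urlparse


def is_path_allowed(url, include_paths, exclude_paths):
    """Return True if the URL's path passes the include/exclude filters.

    Assumption: patterns are glob-style and matched against the path
    without its leading slash. The real crawler may match differently.
    """
    path = urlparse(url).path.lstrip("/")
    # An exclude match always wins.
    if any(fnmatch(path, pattern) for pattern in exclude_paths):
        return False
    # An empty include list means "no restriction".
    if not include_paths:
        return True
    return any(fnmatch(path, pattern) for pattern in include_paths)


include = ["blog/*", "products/*"]
exclude = ["admin/*", "private/*"]

for candidate in (
    "https://example.com/blog/hello-world",
    "https://example.com/admin/users",
    "https://example.com/about",
):
    print(candidate, is_path_allowed(candidate, include, exclude))
```

In this sketch an exclude match takes priority over an include match, which is a design choice of the example rather than documented behavior.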

You can run the crawler in sync or async mode. Async mode requires a webhook endpoint to receive crawl updates and results; create a Webhook flow or URL for this purpose.

In async mode, the following additional parameters are available:

| Parameter | Description | Example Value |
|---|---|---|
| Credential Name | Select previously saved credentials | my-firecrawl-creds |
| Callback Webhook | URL to receive notifications about crawl completion | https://example.com/webhook |
| Webhook Headers | Headers to be sent to the webhook | {"Content-Type": "application/json"} |
| Webhook Metadata | Metadata to be sent to the webhook | {"status": "{{codeNode_540.status}}"} |
| Webhook Events | A multiselect list of events to be sent to the webhook | ["completed", "failed", "page", "started"] |
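As a rough illustration of the async requirements, the sketch below checks a set of node values before a flow is deployed. The key names (`crawlMode`, `webhook`, `webhookEvents`) follow the low-code example in this document; `validate_crawler_values` itself is a hypothetical helper and not something the platform provides.

```python
# The four event names listed for the Webhook Events parameter.
ALLOWED_EVENTS = {"started", "page", "completed", "failed"}


def validate_crawler_values(values):
    """Return a list of human-readable problems; empty means the values look fine."""
    problems = []
    if not values.get("url"):
        problems.append("url is required")
    if values.get("crawlMode") == "async":
        # Async mode delivers results through the callback webhook.
        if not values.get("webhook"):
            problems.append("async mode needs a callback webhook URL")
        unknown = set(values.get("webhookEvents", [])) - ALLOWED_EVENTS
        if unknown:
            problems.append("unknown webhook events: " + ", ".join(sorted(unknown)))
    return problems


values = {
    "url": "https://example.com",
    "crawlMode": "async",
    "webhook": "",
    "webhookEvents": ["completed", "failed"],
}
print(validate_crawler_values(values))
```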

Low-Code Example

nodes:
  - nodeId: crawlerNode_880
    nodeType: crawlerNode
    nodeName: Crawler
    values:
      credentials: ''
      url: ''
      crawlMode: async
      webhook: ''
      webhookHeaders: ''
      webhookMetadata: ''
      webhookEvents:
        - completed
        - failed
        - page
        - started
      crawlSubPages: false
      crawlLimit: 10
      crawlDepth: 1
      excludePath: []
      includePath: []
      maxDiscoveryDepth: 1
      ignoreSitemap: false
      allowBackwardLinks: false
      allowExternalLinks: false
      ignoreQueryParameters: false
      delay: 0
    modes:
      webhook: url
    needs:
      - triggerNode_1Output

Event Trigger Output

The crawler node can emit four events:

- Started: Triggered when the crawl process begins. It provides information about the crawl job, including the job ID and the URL being crawled.

- Page: Triggered for each page that is crawled. It provides details about the crawled page, including the URL and any extracted data.

- Completed: Triggered when the crawl process finishes. It provides a summary of the crawl job, including the total number of pages crawled and any errors encountered.

- Failed: Triggered when the crawl process fails. It provides information about the error that occurred, including the error message and the job ID.
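The four events can be told apart by the `type` field of the payload. The following is a minimal sketch of a dispatcher, assuming payloads shaped like the example event outputs in this section; `describe_event` is a hypothetical helper, and the `job-123` ID is made up for illustration.

```python
import json


def describe_event(payload):
    """Return a one-line summary for a crawler webhook payload."""
    event_type = payload.get("type", "")
    job_id = payload.get("id", "unknown")
    if event_type == "crawl.started":
        return f"job {job_id}: crawl started"
    if event_type == "crawl.page":
        # Each entry in "data" describes one crawled page.
        urls = [page.get("metadata", {}).get("sourceURL", "?") for page in payload.get("data", [])]
        return f"job {job_id}: crawled {', '.join(urls)}"
    if event_type == "crawl.completed":
        return f"job {job_id}: crawl completed"
    if event_type == "crawl.failed":
        return f"job {job_id}: crawl failed ({payload.get('error', 'no error message')})"
    return f"job {job_id}: unhandled event type {event_type!r}"


raw = '{"success": true, "type": "crawl.started", "id": "job-123", "data": [], "metadata": {}}'
print(describe_event(json.loads(raw)))
```

Falling through to an "unhandled" message keeps the handler from failing if the service ever sends an event type that is not listed here.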

Example Event Output - Started

{
  "success": true,
  "type": "crawl.started",
  "id": "82***********************4",
  "data": [],
  "metadata": {}
}

Example Event Output - Page

{
  "success": true,
  "type": "crawl.page",
  "id": "82***********************4",
  "data": [
    {
      "markdown": "Docs\n\nFlows\n\n# Lamatic Flows",
      "metadata": {
        "ogUrl": "https://lamatic.ai/docs/flows",
        "og:title": ["Lamatic Flows - Lamatic.ai Docs", "just-footer"],
        "ogDescription": "Flow builder in Lamatic.ai Studio",
        "twitter:url": "https://lamatic.ai",
        "twitter:image": "https://lamatic.ai/api/og?title=Lamatic%20Flows&description=Flow%20builder%20in%20Lamatic.ai%20Studio&section=Docs",
        "twitter:site:domain": "lamatic.ai",
        "ogTitle": "Lamatic Flows - Lamatic.ai Docs",
        "robots": "index,follow",
        "viewport": [
          "width=device-width, initial-scale=1.0, viewport-fit=cover",
          "width=device-width, initial-scale=1"
        ],
        "og:description": ["Flow builder in Lamatic.ai Studio", "Flow builder in Lamatic.ai Studio"],
        "title": "Lamatic Flows - Lamatic.ai Docs",
        "ogImage": "https://lamatic.ai/api/og?title=Lamatic%20Flows&description=Flow%20builder%20in%20Lamatic.ai%20Studio&section=Docs",
        "next-head-count": "22",
        "og:url": "https://lamatic.ai/docs/flows",
        "og:image": "https://lamatic.ai/api/og?title=Lamatic%20Flows&description=Flow%20builder%20in%20Lamatic.ai%20Studio&section=Docs",
        "twitter:title": "just-footer",
        "description": ["Flow builder in Lamatic.ai Studio", "Flow builder in Lamatic.ai Studio"],
        "theme-color": "#000",
        "twitter:card": "summary_large_image",
        "favicon": "https://lamatic.ai/public/favicon-32x32.png",
        "scrapeId": "3dbb0e15-d286-4857-ae3e-004940418975",
        "sourceURL": "https://lamatic.ai/docs/flows",
        "url": "https://lamatic.ai/docs/flows",
        "statusCode": 200
      }
    }
  ],
  "metadata": {}
}

Example Event Output - Completed

{
  "success": true,
  "type": "crawl.completed",
  "id": "82***********************4",
  "data": [],
  "metadata": {}
}

Example Event Output - Failed

{
  "success": false,
  "type": "crawl.failed",
  "id": "82***********************4",
  "error": "Error Occurred",
  "data": [],
  "metadata": {}
}

Output Schema

Async Mode

- success: A boolean indicating whether the crawl operation was successfully initiated.

- id: A unique identifier assigned to the crawl job.

- url: The API endpoint URL associated with the crawl job, used for tracking or retrieving results.
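Since the `url` field points at the crawl job, one way to track progress is to request it periodically. The sketch below only builds the request and does not send it. It assumes the endpoint accepts the API key as a Bearer token, which should be verified against the Firecrawl documentation; `build_status_request` and the `job-123` ID are hypothetical.

```python
import urllib.request


def build_status_request(async_output, api_key):
    """Build (but do not send) a GET request for the crawl job's status URL."""
    if not async_output.get("success"):
        raise ValueError("crawl was not started successfully")
    return urllib.request.Request(
        async_output["url"],
        # Assumption: the API key is passed as a Bearer token.
        headers={"Authorization": f"Bearer {api_key}"},
        method="GET",
    )


output = {"success": True, "id": "job-123", "url": "https://api.firecrawl.dev/v1/crawl/job-123"}
request = build_status_request(output, api_key="fc_api_xxxxxxxxxxxxx")
print(request.get_method(), request.full_url)
# To actually fetch the status: urllib.request.urlopen(request, timeout=30)
```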

Example Output

{
  "success": true,
  "id": "8***************************7",
  "url": "https://api.firecrawl.dev/v1/crawl/8***************************7"
}

Sync Mode

- success: A boolean indicating whether the crawl operation was successfully completed.

- status: The status of the crawl operation (e.g., "completed").

- completed: The number of pages successfully crawled.

- total: The total number of pages that were attempted to be crawled.

- creditsUsed: The number of credits consumed during the crawl operation.

- expiresAt: The expiration date and time of the crawl job.

- data: An array containing the crawled data, including metadata and extracted information.
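A downstream step will often need only a few of these fields per page. Here is a small sketch that condenses a sync result into one row per crawled page, assuming each `data` entry carries `markdown` and a `metadata` object with `sourceURL`, `title`, and `statusCode`, as in the example output; `summarize_sync_result` is a hypothetical helper and the sample is abbreviated.

```python
def summarize_sync_result(result):
    """Return one (url, status_code, title, markdown_length) tuple per crawled page."""
    rows = []
    for page in result.get("data", []):
        meta = page.get("metadata", {})
        rows.append((
            meta.get("sourceURL"),
            meta.get("statusCode"),
            meta.get("title"),
            len(page.get("markdown", "")),
        ))
    return rows


# Abbreviated sample; a real result carries the full page markdown and metadata.
sample = {
    "success": True,
    "status": "completed",
    "completed": 1,
    "total": 1,
    "data": [{
        "markdown": "# Contributing",
        "metadata": {
            "sourceURL": "https://lamatic.ai/docs/contributing",
            "title": "Contributing to Lamatic.ai Documentation - Lamatic.ai Docs",
            "statusCode": 200,
        },
    }],
}
for row in summarize_sync_result(sample):
    print(row)
```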

Example Output

{
  "success": true,
  "status": "completed",
  "completed": 1,
  "total": 1,
  "creditsUsed": 1,
  "expiresAt": "2025-05-15T08:12:44.000Z",
  "data": [
    {

"markdown": "Docs\n\nContributing\n\n# Contributing to Lamatic.ai Documentation\n\nWe're thrilled that you're interested in contributing to the Lamatic.ai documentation! Your efforts help improve the experience for all users of our platform. This guide will walk you through the process of contributing.\n\n## Getting Started [Permalink for this section](https://lamatic.ai/docs/contributing\\#getting-started)\n\n1. **Understand Our Docs Structure**\n - Our documentation is built using Nextra, a Next.js and MDX-powered static site generator. To get a better understanding of how our docs work, visit our repository at [https://github.com/lamatic/lamatic-docs (opens in a new tab)](https://github.com/lamatic/lamatic-docs) and learn more about Nextra at [https://nextra.site/docs (opens in a new tab)](https://nextra.site/docs).\n2. **Join Our Community**\n\n\n - Have questions or need help? Join our Slack community. We're always happy to assist contributors!\n\n[Join our Slack](https://lamatic.ai/docs/slack)\n\n3. **Get Beta Access**\n - As a token of our appreciation, we'd like to offer all contributors beta access to our platform. Use this link to sign up: [https://studio.lamatic.ai/signup?code=earlybetauser (opens in a new tab)](https://studio.lamatic.ai/signup?code=earlybetauser)\n\n## Steps to Contribute [Permalink for this section](https://lamatic.ai/docs/contributing\\#steps-to-contribute)\n\n1. **Fork the Repository**\n - Visit the [Lamatic Docs GitHub repository (opens in a new tab)](https://github.com/lamatic/lamatic-docs).\n - Click the \"Fork\" button in the top-right corner to create a copy of the repository in your GitHub account.\n2. 
**Clone Your Fork**\n - Clone your forked repository to your local machine:\n\n\n\n ```nx-border-black nx-border-opacity-[0.04] nx-bg-opacity-[0.03] nx-bg-black nx-break-words nx-rounded-md nx-border nx-py-0.5 nx-px-[.25em] nx-text-[.9em] dark:nx-border-white/10 dark:nx-bg-white/10\n git clone https://github.com/your-username/lamatic-docs.git\n cd lamatic-docs\n ```\n3. **Create a New Branch**\n - Create a new branch for your changes:\n\n\n\n ```nx-border-black nx-border-opacity-[0.04] nx-bg-opacity-[0.03] nx-bg-black nx-break-words nx-rounded-md nx-border nx-py-0.5 nx-px-[.25em] nx-text-[.9em] dark:nx-border-white/10 dark:nx-bg-white/10\n git checkout -b your-branch-name\n ```\n\n - Use a descriptive name for your branch, e.g., `add-new-feature-doc` or `fix-typo-in-quickstart`.\n4. **Install Dependencies and Run the Development Server**\n - Install the necessary dependencies:\n\n\n\n ```nx-border-black nx-border-opacity-[0.04] nx-bg-opacity-[0.03] nx-bg-black nx-break-words nx-rounded-md nx-border nx-py-0.5 nx-px-[.25em] nx-text-[.9em] dark:nx-border-white/10 dark:nx-bg-white/10\n npm install\n ```\n\n - Start the development server:\n\n\n\n ```nx-border-black nx-border-opacity-[0.04] nx-bg-opacity-[0.03] nx-bg-black nx-break-words nx-rounded-md nx-border nx-py-0.5 nx-px-[.25em] nx-text-[.9em] dark:nx-border-white/10 dark:nx-bg-white/10\n npm run dev\n ```\n\n - Open your browser and go to `http://localhost:3333/docs` to see your changes in real-time.\n5. **Make Your Changes**\n - Edit or add the necessary files in your local repository.\n - Ensure your changes adhere to the existing style and formatting of the documentation.\n - If you're adding new documentation, update the `_meta.json` file to include the new page.\n - If you're modifying existing documentation, update the corresponding `.mdx` file.\n - Refresh your browser to see your changes reflected immediately.\n6. 
**Commit Your Changes**\n - Stage and commit your changes:\n\n\n\n ```nx-border-black nx-border-opacity-[0.04] nx-bg-opacity-[0.03] nx-bg-black nx-break-words nx-rounded-md nx-border nx-py-0.5 nx-px-[.25em] nx-text-[.9em] dark:nx-border-white/10 dark:nx-bg-white/10\n git add .\n git commit -m \"Brief description of your changes\"\n ```\n7. **Push Your Changes**\n - Push your changes to your forked repository:\n\n\n\n ```nx-border-black nx-border-opacity-[0.04] nx-bg-opacity-[0.03] nx-bg-black nx-break-words nx-rounded-md nx-border nx-py-0.5 nx-px-[.25em] nx-text-[.9em] dark:nx-border-white/10 dark:nx-bg-white/10\n git push origin your-branch-name\n ```\n8. **Create a Pull Request**\n - Go to the [original Lamatic Docs repository (opens in a new tab)](https://github.com/lamatic/lamatic-docs).\n - Click on \"Pull requests\" and then the \"New pull request\" button.\n - Click \"compare across forks\" and select your fork and branch.\n - Review your changes and click \"Create pull request\".\n - Provide a clear title and description for your pull request.\n9. **Wait for Review**\n - The Lamatic.ai team will review your contribution.\n - They may request changes or provide feedback.\n - Once approved, your changes will be merged into the main documentation.\n\n## Issue Types [Permalink for this section](https://lamatic.ai/docs/contributing\\#issue-types)\n\nWhen contributing or reporting issues, it's helpful to categorize them. Here are the main types of issues you might encounter or want to report:\n\n1. **🐛 Bug**: An error, flaw, or fault in the documentation that produces an incorrect or unexpected result. This could include broken links, incorrect information, or formatting issues.\n\n2. **🚀 Feature Request**: A suggestion for a new addition to the documentation. This could be a request for documentation on a new feature of Lamatic.ai or a proposal for a new section in the existing docs.\n\n3. **📈 Improvement**: A suggestion to enhance existing documentation. 
This could involve clarifying explanations, adding more examples, or restructuring content for better readability.\n\n4. **✏️ Typo**: A small error in the text, such as a misspelling or grammatical mistake.\n\n\nWhen creating an issue or pull request, please prefix your title with the appropriate issue type in square brackets or assign appropriate labels. For example:\n\n- \\[Bug\\] Broken link in Quick Start guide\n- \\[Feature Request\\] Add documentation for new API endpoint\n- \\[Improvement\\] Clarify explanation in Authentication section\n- \\[Typo\\] Fix misspelling in Contributing guide\n\nThis categorization helps the maintainers prioritize and address issues more efficiently.\n\nThank you for contributing to Lamatic.ai documentation! Your efforts are greatly appreciated and help make our platform better for everyone. If you have any questions during the process, don't hesitate to reach out on our Slack channel.\n\nLast updated on April 24, 2025\n\nPlatform Status ↗ [Bug Bounty Program](https://lamatic.ai/docs/vulnerability-disclosure \"Bug Bounty Program\")\n\n### Was this page useful?\n\nYesCould be better\n\n### Questions? We're here to help\n\n[FeedbackOpenAI](https://product.lamatic.ai/) [Email](mailto:[email protected]) [Talk to sales](https://lamatic.ai/docs/demo)\n\n### Subscribe to updates\n\nGet updates\n\njust-footer",
      "metadata": {
        "twitter:url": "https://lamatic.ai",
        "next-head-count": "22",
        "twitter:site:domain": "lamatic.ai",
        "ogImage": "https://lamatic.ai/api/og?title=Contributing%20to%20Lamatic.ai%20Documentation&description=Contributing%20to%20Lamatic.ai%20Documentation&section=Docs",
        "ogTitle": "Contributing to Lamatic.ai Documentation - Lamatic.ai Docs",
        "twitter:title": "just-footer",
        "ogDescription": "Contributing to Lamatic.ai Documentation",
        "description": [
          "Contributing to Lamatic.ai Documentation",
          "Contributing to Lamatic.ai Documentation"
        ],
        "og:url": "https://lamatic.ai/docs/contributing",
        "ogUrl": "https://lamatic.ai/docs/contributing",
        "og:description": [
          "Contributing to Lamatic.ai Documentation",
          "Contributing to Lamatic.ai Documentation"
        ],
        "viewport": [
          "width=device-width, initial-scale=1.0, viewport-fit=cover",
          "width=device-width, initial-scale=1"
        ],
        "favicon": "https://lamatic.ai/public/favicon-32x32.png",
        "title": "Contributing to Lamatic.ai Documentation - Lamatic.ai Docs",
        "robots": "index,follow",
        "twitter:card": "summary_large_image",
        "twitter:image": "https://lamatic.ai/api/og?title=Contributing%20to%20Lamatic.ai%20Documentation&description=Contributing%20to%20Lamatic.ai%20Documentation&section=Docs",
        "og:title": [
          "Contributing to Lamatic.ai Documentation - Lamatic.ai Docs",
          "just-footer"
        ],
        "theme-color": "#000",
        "og:image": "https://lamatic.ai/api/og?title=Contributing%20to%20Lamatic.ai%20Documentation&description=Contributing%20to%20Lamatic.ai%20Documentation&section=Docs",
        "scrapeId": "332e0d70-915e-44df-bc34-7c888543add8",
        "sourceURL": "https://lamatic.ai/docs/contributing",
        "url": "https://lamatic.ai/docs/contributing",
        "statusCode": 200
      }
    }
  ]
}

Troubleshooting

Common Issues

| Problem | Solution |

|---|---|

| Invalid API Key | Ensure the API key is correct and has not expired. |

| Connection Issues | Verify that the host URL is correct and reachable. |

| Webhook Errors | Check if the webhook endpoint is active and correctly configured. |

| Crawling Errors | Review the inclusion/exclusion paths for accuracy. |

| Dynamic Content Not Loaded | Increase the Wait for Page Load time in the configuration. |

Debugging

- Check Firecrawl logs for detailed error information.

- Test the webhook endpoint to confirm it is receiving updates.
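One way to test the webhook endpoint is to post a sample notification to it yourself. The sketch below builds such a request, modeled on the Started event example in this document, without sending it; `build_webhook_test_request`, the `test-job` ID, and the `https://example.com/webhook` URL are placeholders for illustration.

```python
import json
import urllib.request


def build_webhook_test_request(webhook_url, job_id="test-job"):
    """Build a POST request that mimics a crawl.started notification."""
    payload = {"success": True, "type": "crawl.started", "id": job_id, "data": [], "metadata": {}}
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_webhook_test_request("https://example.com/webhook")
print(request.get_method(), request.full_url)
# Send it with: urllib.request.urlopen(request, timeout=10)
```

If the endpoint is healthy, the receiving flow should log or process the sample payload like a real Started event.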