Slack Internal Support Bot with Scraper

Try out these flows yourself at Lamatic.ai. Sign up for free and start building your own AI workflows.

In this tutorial, you'll learn how to use Lamatic.ai and RAG (Retrieval-Augmented Generation) to build a bot, connected to your Slack channel, that answers questions based on your documentation.

What You'll Build

- Use Lamatic.ai Studio to build two flows.

- Scrape your documentation with the FireCrawl scraper and index it for RAG.

- Build a Slack webhook bot that answers questions with a RAG-powered LLM.

Getting Started

1. Create an Account and a New Flow

- Sign up at Lamatic.ai and log in.

- Navigate to Projects and click New Project, or select an existing project.

- You'll see sections such as Flows, Context, and Connections.

- Select "Create from Scratch".

- You will build and configure eight nodes: API (Trigger Node), Scraper Node, Chunking Node, Extract Chunks (Code Node), Vectorize Node, Transform Metadata (Code Node), Index Node, and API Response.

2. API Trigger Node

- Modify the API schema to accept two required input parameters: "url" and "filename".

- "filename" is a label for the content at "url". You can choose any name, but it should be unique for each document or link.

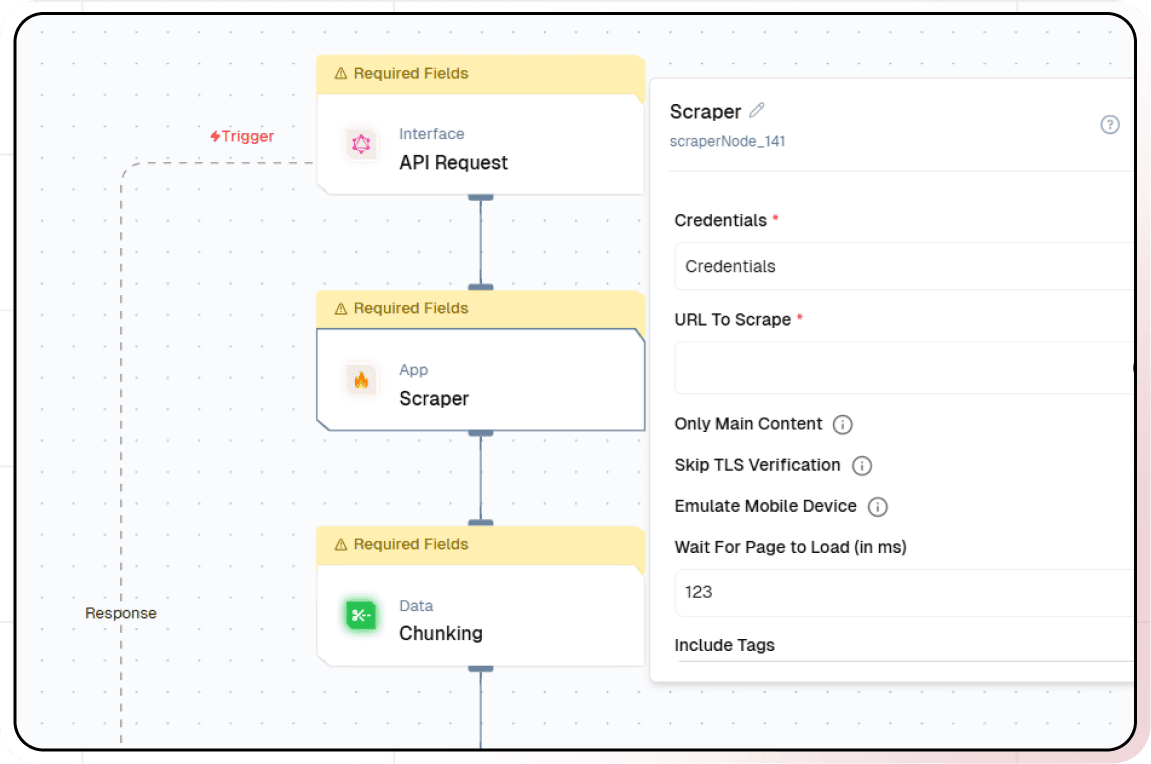
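If you call this flow from your own code, it can help to check the payload before sending it. Here is a minimal Python sketch that validates the two fields the API Trigger Node expects. The function name `validate_trigger_payload` and the specific rules (an absolute http(s) URL and a non-empty filename) are assumptions for illustration, not Lamatic's own validation.

```python
from urllib.parse import urlparse


def validate_trigger_payload(payload: dict) -> dict:
    """Check a hypothetical trigger payload with "url" and "filename" fields.

    Returns a cleaned copy; raises ValueError if a field is missing or invalid.
    """
    url = payload.get("url")
    filename = payload.get("filename")

    # "url" must be an absolute http(s) URL so the scraper can fetch it.
    if not isinstance(url, str):
        raise ValueError("'url' must be a string")
    parsed = urlparse(url.strip())
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"'url' is not an absolute http(s) URL: {url!r}")

    # "filename" is a free-form label, but it must not be empty.
    if not isinstance(filename, str) or not filename.strip():
        raise ValueError("'filename' must be a non-empty string")

    return {"url": url.strip(), "filename": filename.strip()}


print(validate_trigger_payload({"url": "https://docs.example.com/setup", "filename": "setup-guide"}))
# → {'url': 'https://docs.example.com/setup', 'filename': 'setup-guide'}
```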

3. Scraper Node

- In the "Scraper" node, authorize Lamatic.ai to use your FireCrawl account by providing the required credentials.

- Set "URL to Scrape" to the "url" passed from the API Trigger Node, and toggle the "Only Main Content" option on.

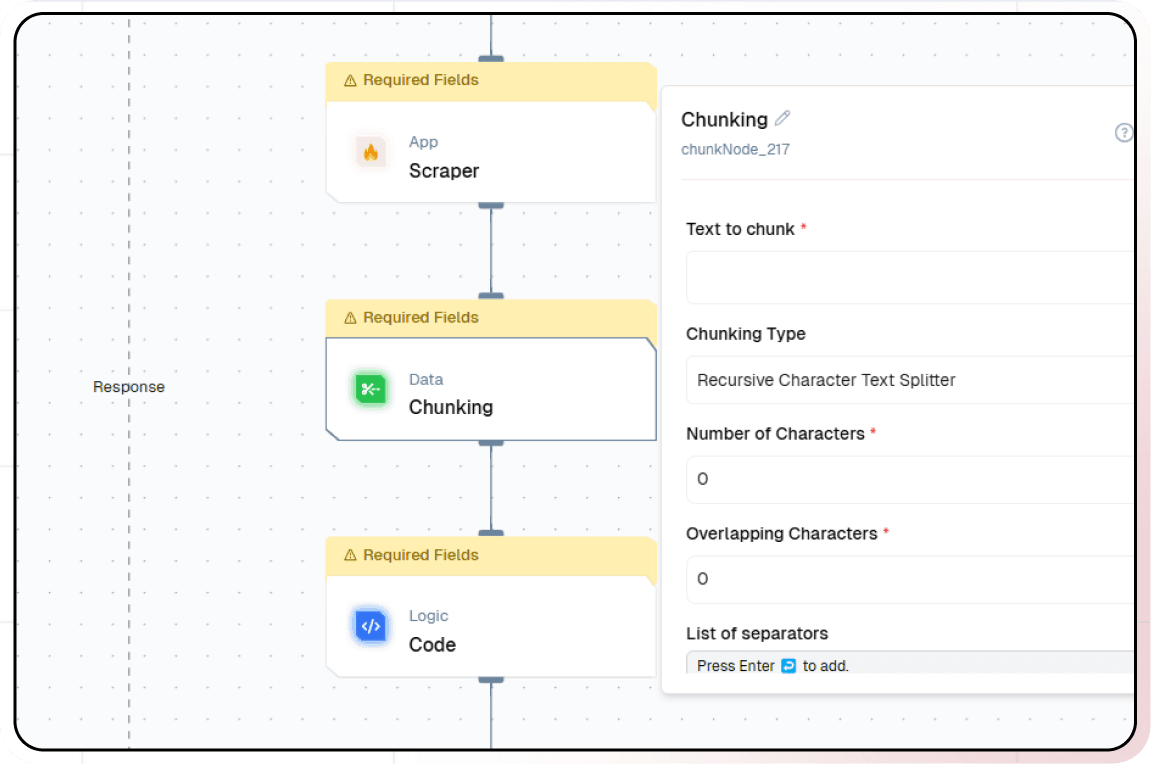
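FireCrawl performs the "Only Main Content" extraction for you. To show the general idea, here is a crude Python approximation built on the standard library's `html.parser`: it keeps visible text but drops anything inside navigation, header, footer, aside, script, and style elements. This heuristic and the `SKIP_TAGS` list are assumptions for illustration, not FireCrawl's actual algorithm.

```python
from html.parser import HTMLParser

# Elements treated as page chrome rather than main content.
# This list is an illustrative assumption, not FireCrawl's rule set.
SKIP_TAGS = {"script", "style", "nav", "header", "footer", "aside", "noscript"}


class MainTextExtractor(HTMLParser):
    """Collects visible text, ignoring anything nested inside SKIP_TAGS."""

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0  # greater than 0 while inside a skipped element
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self.skip_depth == 0:
            self.parts.append(text)


def main_text(html: str) -> str:
    """Return the visible text outside SKIP_TAGS, one text run per line."""
    parser = MainTextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.parts)


page = (
    "<html><head><style>p { color: red }</style></head><body>"
    "<nav>Home | Docs</nav><main><h1>Setup</h1><p>Install the CLI.</p></main>"
    "<footer>Copyright 2024</footer></body></html>"
)
print(main_text(page))  # prints "Setup" and "Install the CLI." on separate lines
```

Real pages vary widely, which is why a dedicated scraper is used in the flow instead of a tag list like this one.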

4. Data Chunking

Data chunking is a critical process that determines how effectively your chatbot can understand and retrieve information. Think of chunking as breaking down a book into logical chapters and paragraphs — it needs to be done thoughtfully to maintain context and meaning.

Chunking splits your documents into smaller, meaningful pieces. Each chunk needs to be large enough to keep its context but small enough to be specific and relevant when retrieved. Getting this balance right is crucial for your chatbot’s performance.

- Navigate to the “Chunking” node.

- Click the plus (+) icon and select the text you want to chunk (for example, the Scraper node's output).

- Set your chunking parameters and adjust other default options under Additional Properties.

- Click the "Extract Chunks" node, a Code Node where you write the logic that extracts the data from the chunks for the next step.

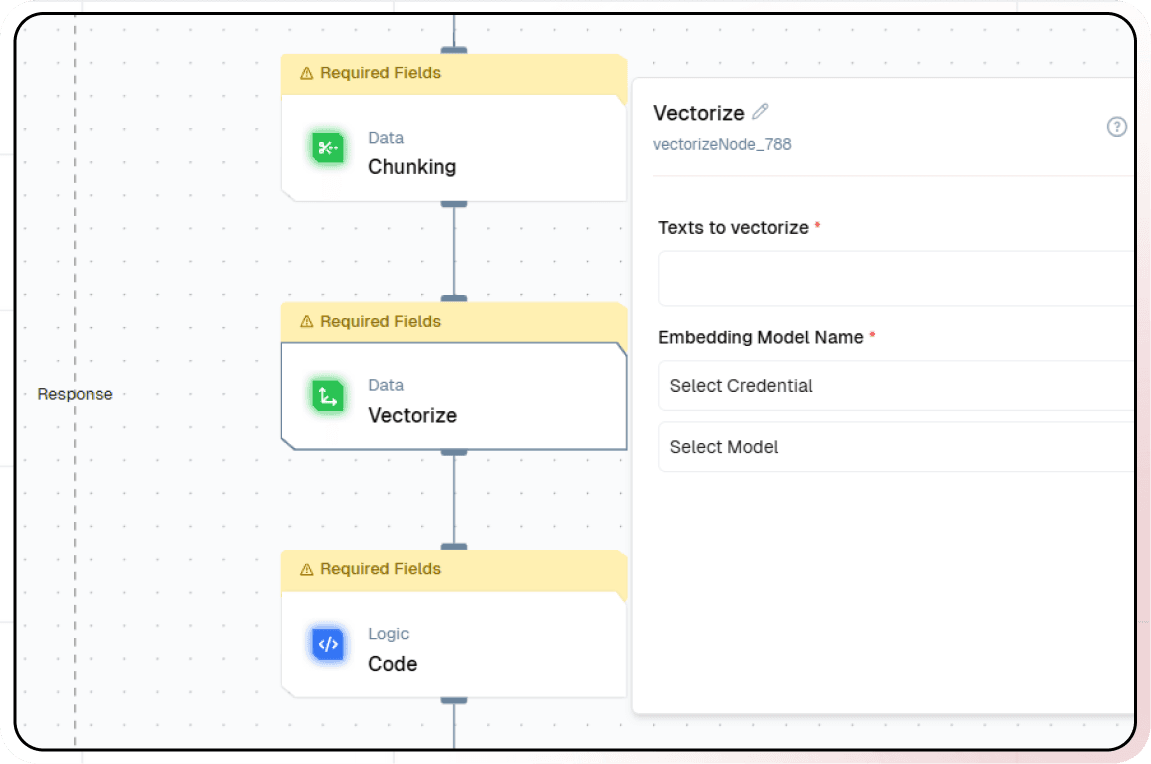
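The Chunking node's exact splitting strategy depends on the parameters you set. As a rough sketch of the chunk-size trade-off described above, here is fixed-size character chunking with overlap in Python, plus a hypothetical `extract_chunks` function standing in for the Extract Chunks code node. The chunk object shape (a dict with a "text" key) and the default sizes are assumptions, and Lamatic's node may split differently (for example, on paragraph boundaries).

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows; neighbors share `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be at least 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


def extract_chunks(chunk_objects: list[dict]) -> list[str]:
    """Hypothetical Extract Chunks logic: keep the non-empty "text" of each chunk object."""
    return [c["text"] for c in chunk_objects if c.get("text", "").strip()]


chunks = chunk_text("abcdefghij", chunk_size=4, overlap=1)
print(chunks)  # ['abcd', 'defg', 'ghij']
print(extract_chunks([{"text": t} for t in chunks] + [{"text": "   "}]))
```

The overlap means text cut at a chunk boundary also appears, in part, at the start of the next chunk, which helps preserve context across boundaries at the cost of some duplicated storage.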

5. Vectorize the Data

Vectorization turns each chunk of text into an embedding: a list of numbers that captures its meaning, so text with similar meaning ends up with similar vectors. This step is like creating a detailed map of your knowledge base that the AI can navigate quickly and accurately.

- Click on the "Vectorize" node.

- Click the plus (+) icon to choose the data and input the text you want to vectorize.

- Select the embedding model. For this example, we’ll use OpenAI’s text-embedding-3-small.
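text-embedding-3-small returns dense vectors (1,536 dimensions by default), and retrieval typically compares them using cosine similarity. The model itself can't be reproduced here, so this Python sketch swaps in a toy bag-of-words embedding (word counts over a fixed vocabulary) purely to show how cosine similarity ranks chunks against a query. The helper names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def build_vocab(texts: list[str]) -> list[str]:
    """Collect every token in the corpus, in a fixed (sorted) order."""
    return sorted({tok for t in texts for tok in tokenize(t)})


def embed(text: str, vocab: list[str]) -> list[float]:
    """Toy stand-in for an embedding model: token counts over the vocabulary.

    Tokens outside the vocabulary are ignored.
    """
    counts = Counter(tokenize(text))
    return [float(counts[tok]) for tok in vocab]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means nothing in common."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


docs = ["Install the CLI with pip.", "Refunds are processed within five days."]
vocab = build_vocab(docs)
query = embed("How do I install the CLI?", vocab)
scores = [cosine(query, embed(d, vocab)) for d in docs]
print(scores)  # the installation chunk scores higher than the refunds chunk
```

A learned model like text-embedding-3-small also places paraphrases close together (for example, "set up" and "install"), which word counts cannot do.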

6. Transform Metadata

Transforming metadata is like creating a detailed index for your knowledge base. This step ensures that your chatbot can quickly and accurately retrieve the information it needs to answer questions.

- Click the "Transform Metadata" node, a Code Node that reshapes the metadata of the extracted chunks into a consistent, readable form before indexing.
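The exact transformation is up to you. Here is a hypothetical version of the Transform Metadata logic in Python: it turns each chunk into one flat record carrying the chunk text, the source filename and URL, and the chunk's position. The field names are assumptions; use whatever your Index node and RAG prompt expect.

```python
def transform_metadata(chunks: list[str], filename: str, url: str) -> list[dict]:
    """Hypothetical Transform Metadata logic: one flat record per chunk."""
    return [
        {
            "content": text.strip(),  # chunk text, returned to the LLM at query time
            "source": filename,       # which document the chunk came from
            "url": url,               # where the document was scraped from
            "chunk_index": i,         # position of the chunk within the document
        }
        for i, text in enumerate(chunks)
    ]


records = transform_metadata(
    ["Install the CLI.", "Run the setup wizard."],
    "setup-guide",
    "https://docs.example.com/setup",
)
print(records[1])
```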

7. Store Vector Data in the Vector Database

The database storage step is where your vectorized knowledge becomes organized and readily accessible. This is similar to creating a highly efficient library catalog system, but instead of organizing books by author or subject, we’re organizing information by semantic meaning and relevance.

- Click the "Index" node.

- Select the desired vector database.

- Add Vectors, Metadata, and a Primary Key by clicking the “+” icon.
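To see why the primary key matters, here is a toy in-memory stand-in for the vector database in Python. Writing a row with an existing key replaces it instead of adding a duplicate, so deriving keys from the filename and chunk index (a hypothetical scheme) lets you re-index a document without piling up copies. Under this scheme, two documents sharing a filename would overwrite each other's chunks.

```python
from dataclasses import dataclass, field


@dataclass
class InMemoryIndex:
    """Toy stand-in for a vector database: primary key -> (vector, metadata)."""

    rows: dict[str, tuple[list[float], dict]] = field(default_factory=dict)

    def upsert(self, vectors: list[list[float]], metadata: list[dict], keys: list[str]) -> None:
        """Insert rows; a key that already exists is overwritten, not duplicated."""
        if not len(vectors) == len(metadata) == len(keys):
            raise ValueError("vectors, metadata and keys must have the same length")
        for key, vec, meta in zip(keys, vectors, metadata):
            self.rows[key] = (vec, meta)


def primary_keys(filename: str, count: int) -> list[str]:
    """Hypothetical key scheme: '<filename>-<chunk index>'."""
    return [f"{filename}-{i}" for i in range(count)]


index = InMemoryIndex()
index.upsert([[0.1, 0.2], [0.3, 0.4]], [{"content": "a"}, {"content": "b"}], primary_keys("guide", 2))
# Re-indexing "guide" replaces row "guide-0" instead of adding a third row.
index.upsert([[0.5, 0.6]], [{"content": "a, updated"}], primary_keys("guide", 1))
print(len(index.rows))  # 2
```

A real re-index of a document that became shorter would also need to delete the keys of chunks that no longer exist.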

8. Testing

Click the “Test” button to test the flow, and pass url and filename as test inputs:

- url: the URL of the document you want to extract data from.

- filename: the name of that document. It should be unique for each document or link.

For testing, you provide url and filename manually as dummy data. Once the flow is deployed, the external application that calls it must pass these values to the API Trigger node.

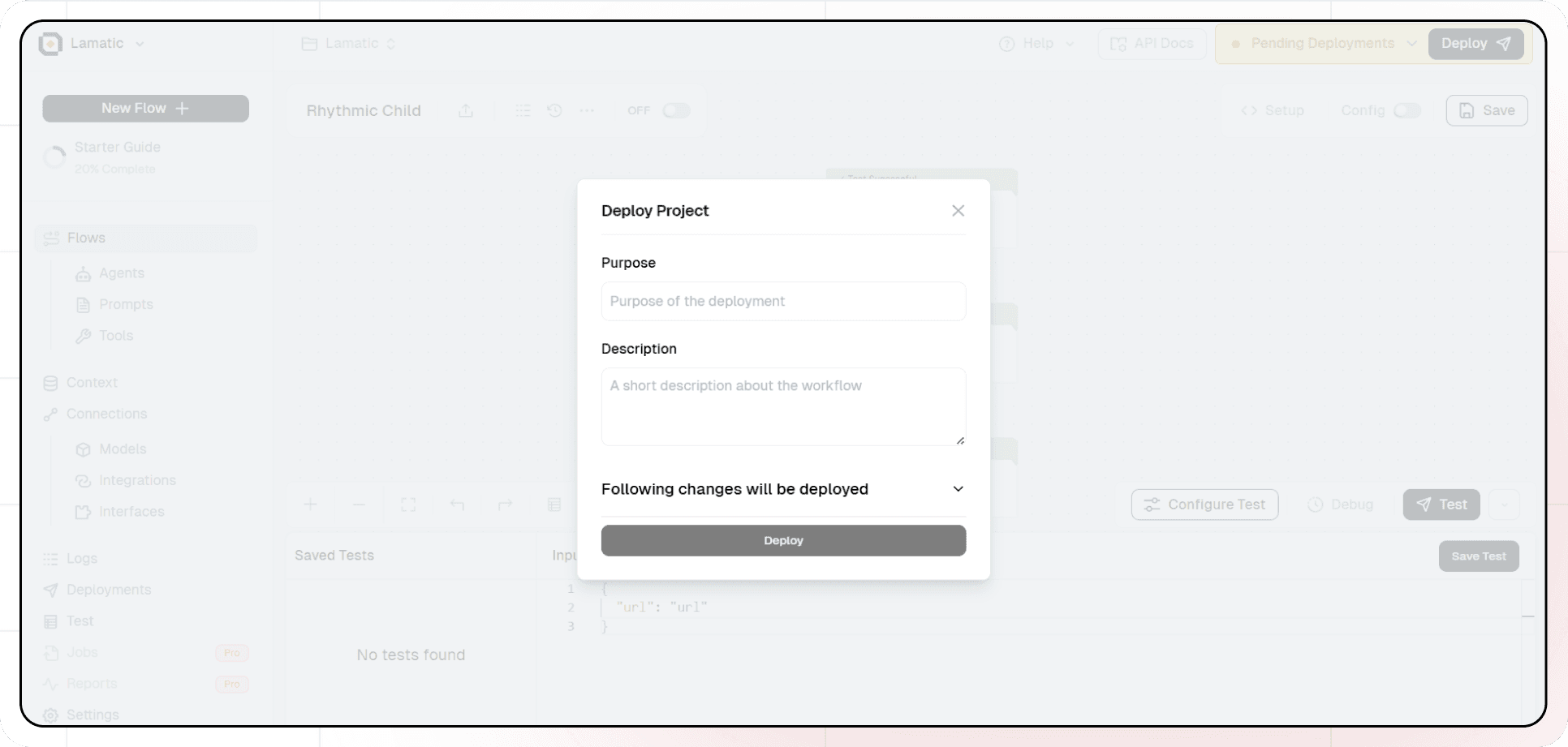

9. Deployment

- Click the Deploy button.

- Your API is now ready to be integrated into Node.js or Python applications.

- Your flow runs on Lamatic's global edge network for fast, scalable performance.

Once deployed, the flow is accessible as an API endpoint, and you can check its logs in the Logs section.
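Once deployed, the flow can be called from an external application. The sketch below uses Python's standard `urllib.request` to POST the url and filename as JSON. The endpoint URL, the bearer-token header, and the flat JSON body are placeholders and assumptions: copy the real endpoint, authentication headers, and request format from your deployment's API details.

```python
import json
import urllib.request

# Placeholders (assumptions): replace with the values from your Lamatic deployment.
ENDPOINT = "https://example.invalid/your-flow-endpoint"
API_KEY = "YOUR_API_KEY"


def build_request(url: str, filename: str) -> urllib.request.Request:
    """Build a POST request carrying the flow's two inputs as a JSON body."""
    body = json.dumps({"url": url, "filename": filename}).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",  # assumed auth scheme
        },
        method="POST",
    )


def index_document(url: str, filename: str) -> dict:
    """Send the request and decode the JSON response (needs a real endpoint)."""
    with urllib.request.urlopen(build_request(url, filename), timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))


req = build_request("https://docs.example.com/setup", "setup-guide")
print(req.get_method(), req.full_url)
# result = index_document("https://docs.example.com/setup", "setup-guide")
```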

10. Create a New Chatbot Flow to Implement RAG

Now it’s time to create the chatbot that will utilize your knowledge base through RAG technology.

This step brings the previous components together into a chatbot that answers queries from your knowledge base. RAG (Retrieval-Augmented Generation) retrieves the chunks most relevant to a question from your vector database and passes them to the LLM as context, so the answer combines the model's understanding of language with your specific documentation.

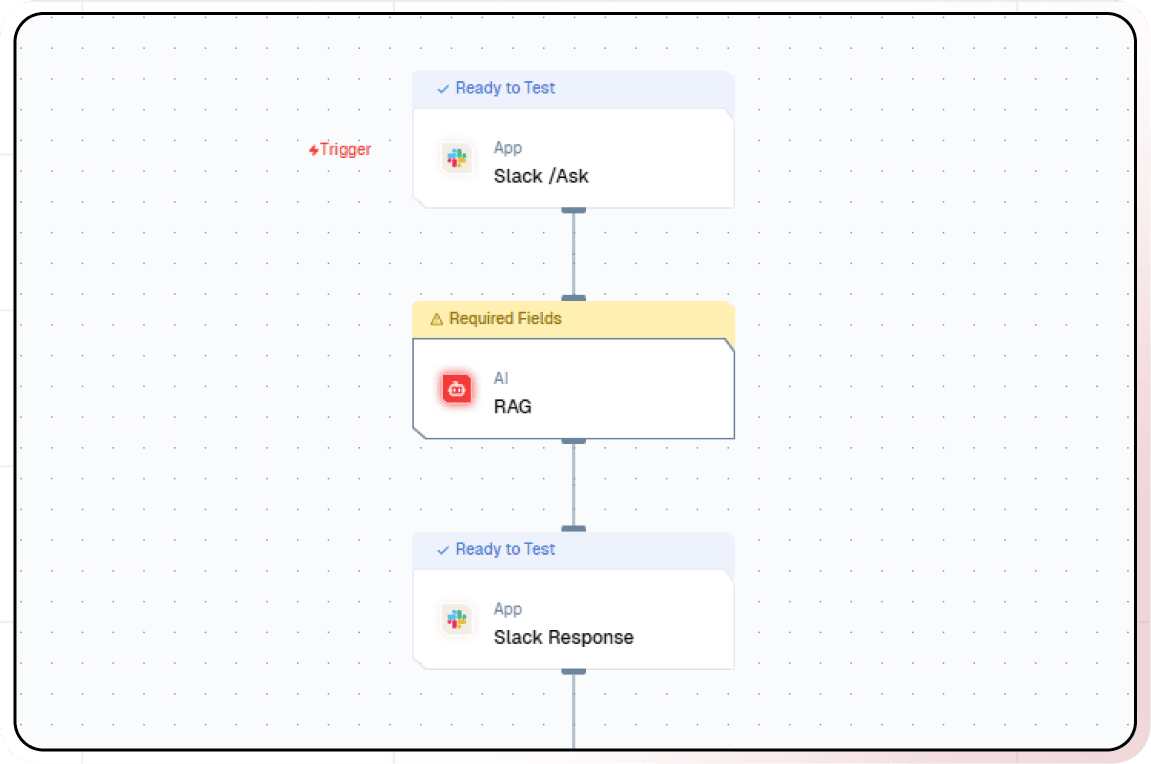

- Create a new flow named “Slack Support Bot”, or start from the Slack Ask Bot template.

- Add a “Slack” node to receive questions from your Slack channel.

- Add a RAG node: select the vector database, pass the user's message as the query, and select the LLM to use.

- Add a response “Slack” node to send the answer back to the Slack channel.
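The RAG node handles retrieval and prompting for you. To make the data flow concrete, here is a simplified Python sketch: it pulls the question out of a Slack Events API callback (stripping `<@USER>` mention tokens from `event.text`), ranks stored chunk vectors by cosine similarity to a query vector, and assembles a prompt for the LLM. It assumes the query vector comes from the same embedding model used at indexing time; the record fields and prompt wording are hypothetical.

```python
import math
import re


def question_from_slack_event(payload: dict) -> str:
    """Return event.text from a Slack Events API callback, minus <@USER> mention tokens."""
    text = payload.get("event", {}).get("text", "")
    return re.sub(r"<@[^>]+>", "", text).strip()


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query_vec: list[float], rows: list[tuple[list[float], dict]], k: int = 3) -> list[dict]:
    """Return metadata for the k stored chunks most similar to the query vector."""
    ranked = sorted(rows, key=lambda row: cosine(query_vec, row[0]), reverse=True)
    return [meta for _, meta in ranked[:k]]


def build_prompt(question: str, context: list[dict]) -> str:
    """Hypothetical prompt template: retrieved chunks first, then the question."""
    docs = "\n\n".join(f"[{m['source']}] {m['content']}" for m in context)
    return (
        "Answer the question using only the documentation below. "
        "If the answer is not there, say you don't know.\n\n"
        f"Documentation:\n{docs}\n\nQuestion: {question}"
    )


event = {
    "type": "event_callback",
    "event": {"type": "app_mention", "channel": "C123", "text": "<@U0BOT> How do I install the CLI?"},
}
rows = [
    ([1.0, 0.0], {"source": "setup-guide", "content": "Install the CLI with pip."}),
    ([0.0, 1.0], {"source": "billing", "content": "Refunds take five days."}),
]
question = question_from_slack_event(event)
# In the real flow the query vector comes from the embedding model; here it is made up.
print(build_prompt(question, retrieve([0.9, 0.1], rows, k=1)))
```

Telling the model to answer only from the retrieved documentation, and to admit when the answer is missing, is a common way to keep a support bot from inventing answers.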

11. Test and Deploy the Flow

Thorough testing ensures your chatbot provides accurate and helpful responses. Once you’ve tested the flow with your Slack channel, click the Deploy button and enter a deployment message to make it live.

You've successfully built your own intelligent documentation Slack bot!