Custom Media Chatbot

Difficulty Level

Intermediate

Nodes

Extract From File, Text LLM

Tags

Support

Try out this flow yourself at Lamatic.ai. Sign up for free and start building your own AI workflows.

In this tutorial, you'll learn how to build a document-based chatbot that answers questions about the content of your media files in a ready-made chat interface.

What You'll Build

- Use Lamatic.ai Studio to assemble the flow.

- Build a Chatbot Widget that answers questions based on your media files.

- Implement RAG with the Extract File and Text LLM nodes.

Getting Started

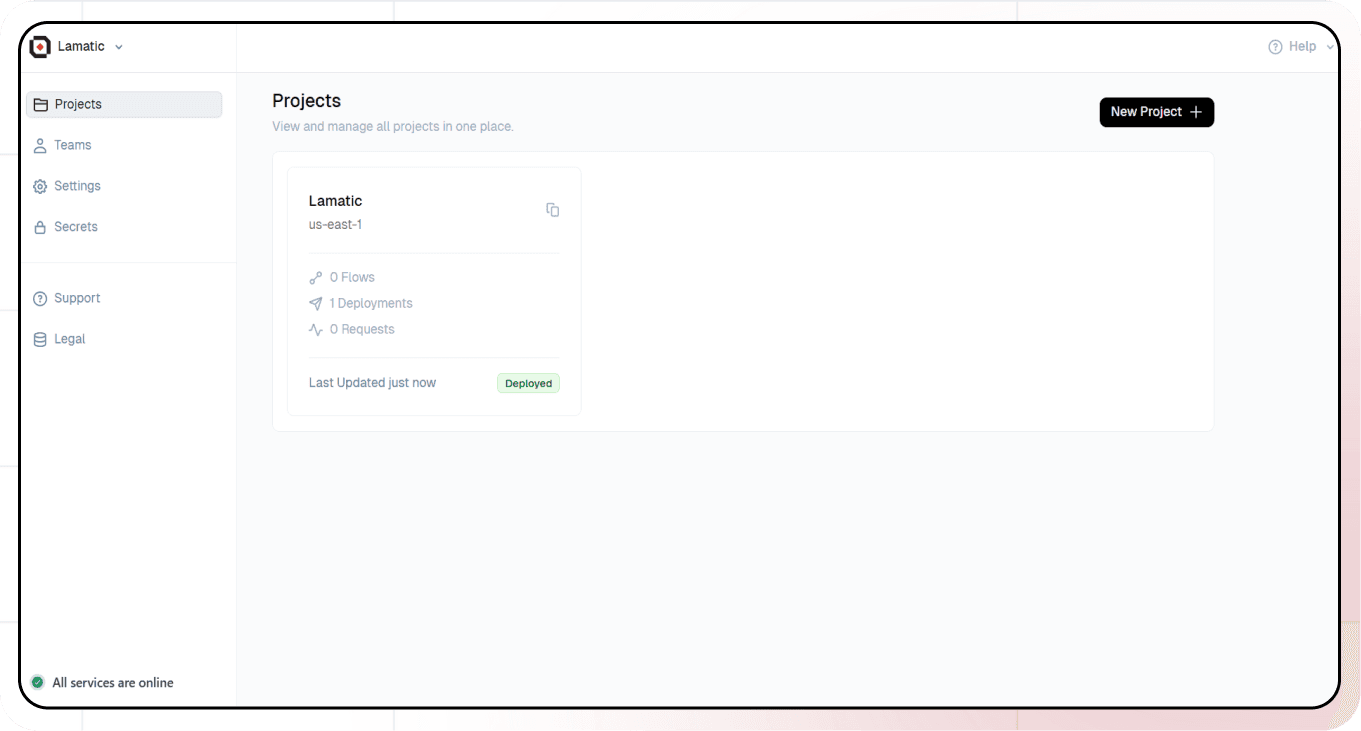

1. Account Creation and Create a New Flow

- Sign up at Lamatic.ai and log in.

- Navigate to Projects, then click New Project or select an existing project.

- You'll see sections such as Flows, Context, and Connections.

- Select "Create from Scratch".

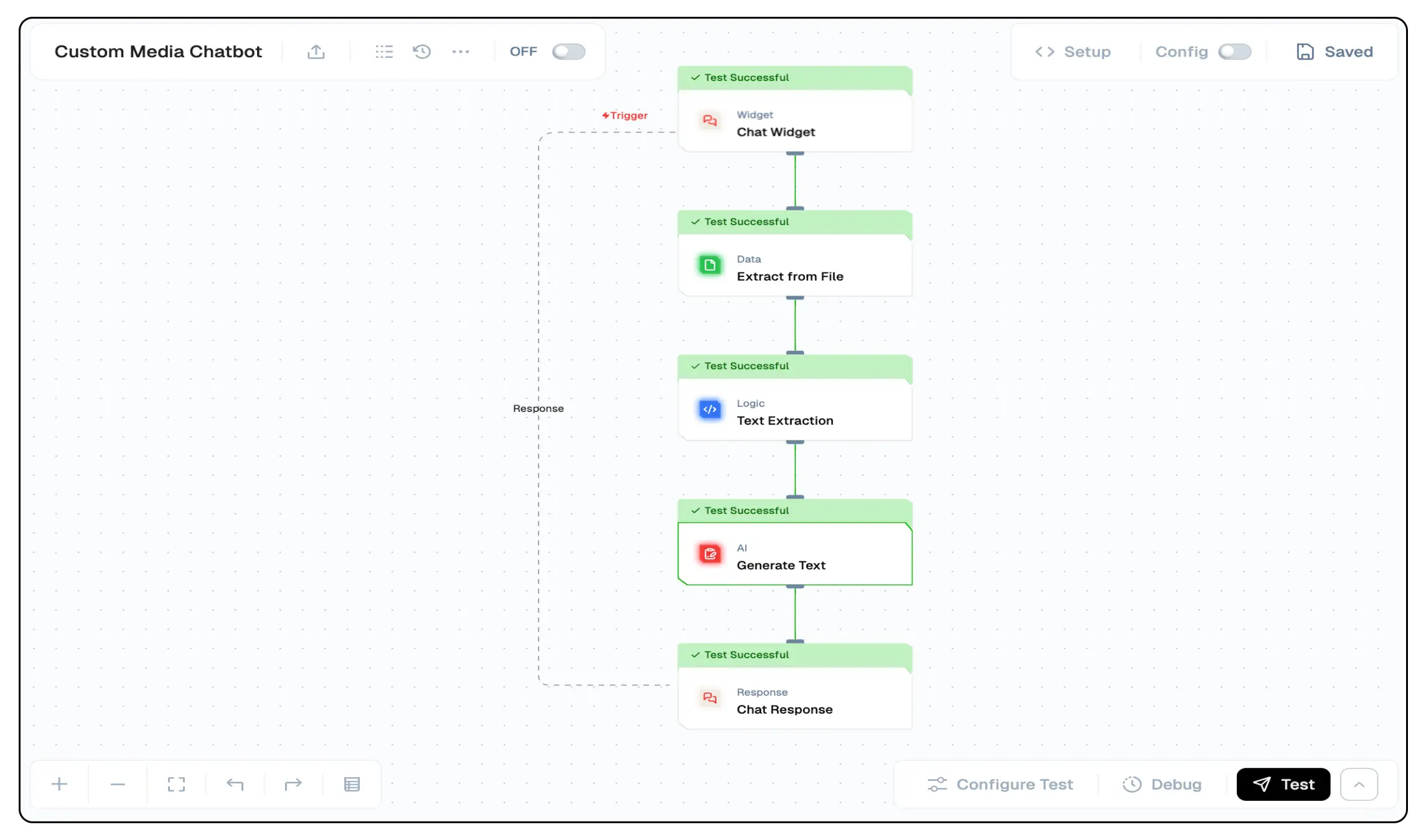

- You will need to create 5 nodes: Chatbot Widget (Trigger Node), Extract File (Extract File Node), Extract Text (Code Node), Text LLM (AI Node), and Chat Response (Response Node).

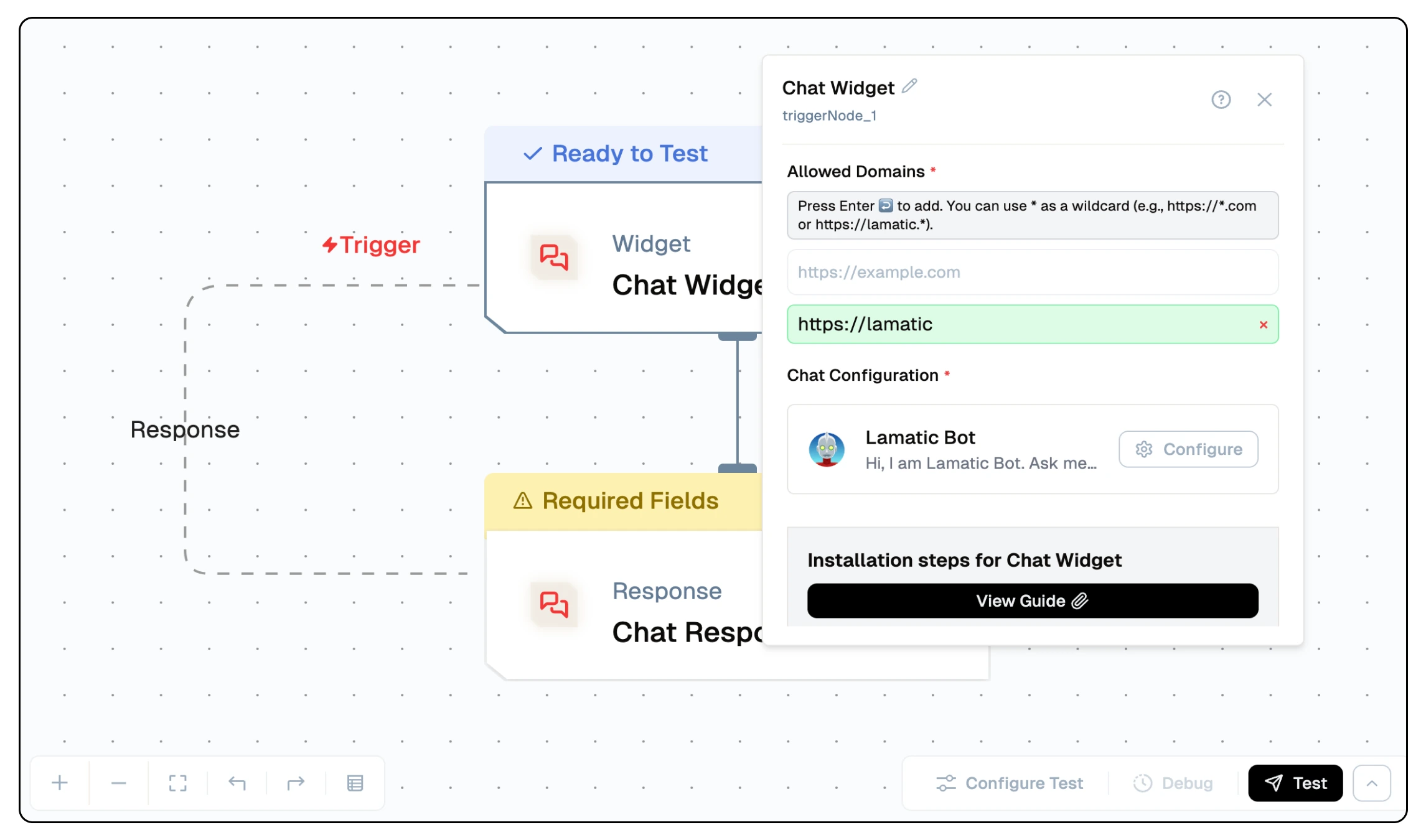

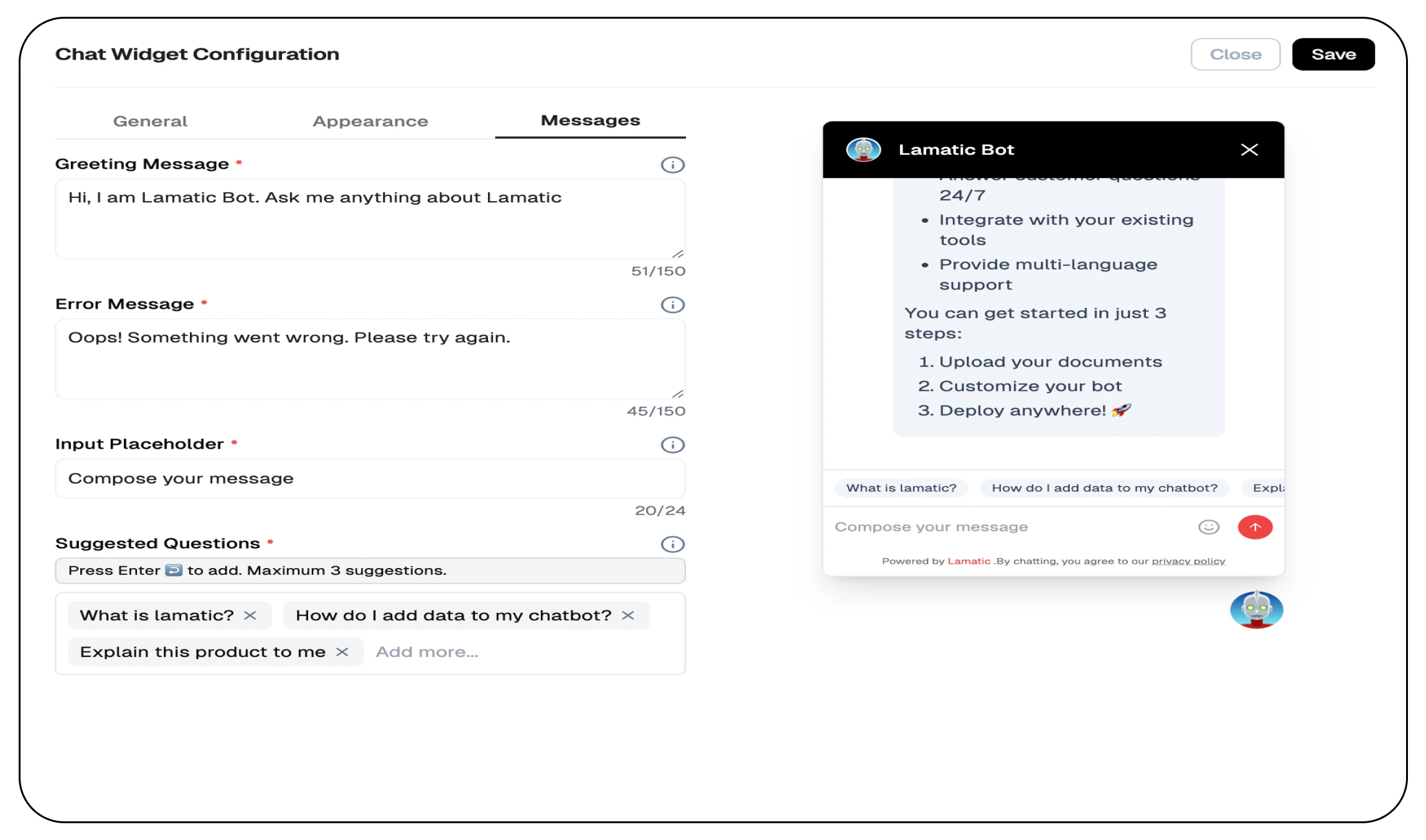

2. Chatbot Widget

- Add the Chatbot Widget to your flow as the trigger node, and configure its allowed domains.

- Choose the domains where the widget may be embedded, or use the wildcard (*) to allow all domains.

- You can also customise the widget's appearance, including its color, position, and other settings.
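To make the allowed-domains setting concrete, here is a minimal Python sketch of how such a check could behave. It is an illustration only, not Lamatic's implementation: the function name `is_origin_allowed` and the exact-hostname matching rule are assumptions.

```python
from typing import Sequence
from urllib.parse import urlparse


def is_origin_allowed(origin: str, allowed_domains: Sequence[str]) -> bool:
    """Return True if a page at `origin` may embed the widget.

    Hypothetical rule: "*" allows everything, otherwise the hostname
    must match one of the allowed domains exactly (case-insensitive).
    """
    if "*" in allowed_domains:
        return True
    host = urlparse(origin).hostname  # None when the string has no scheme/host
    if host is None:
        return False
    return host.lower() in {d.lower() for d in allowed_domains}
```

A wildcard is convenient while testing, but listing specific domains is the safer choice once the widget is live.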

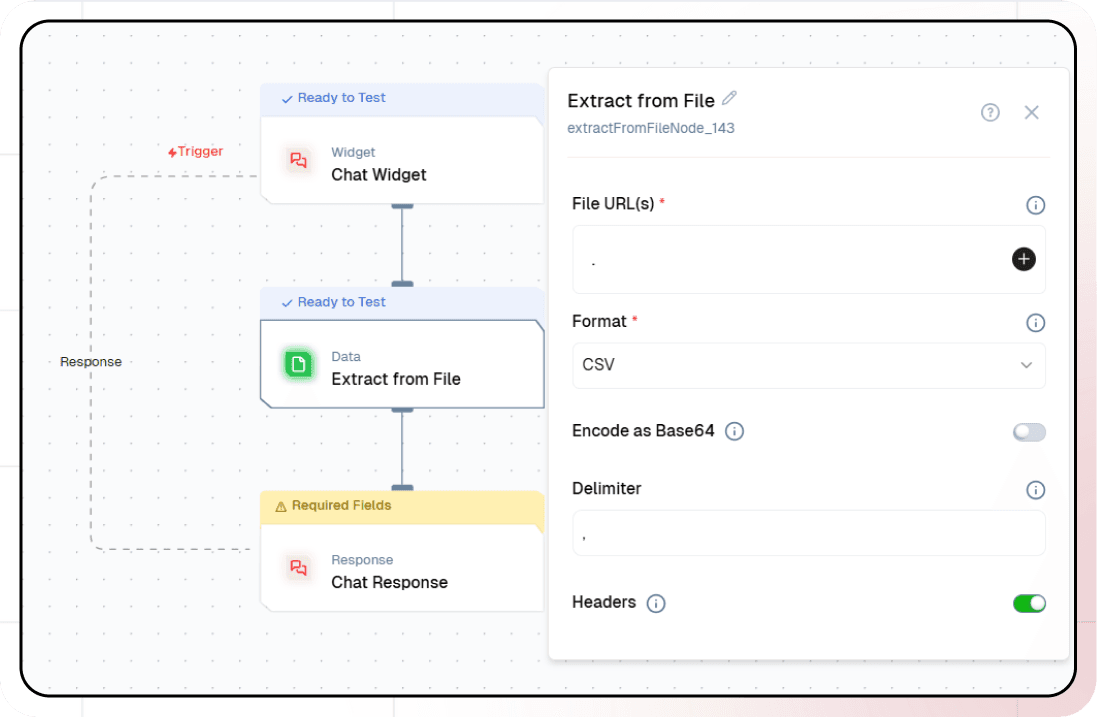

3. Extract File Node

- Add the "Extract File" node to your flow.

- Configure the file extraction settings with the URL and type of the file. If you are using a PDF, select 'Join Pages' to extract the text from all pages.

- The node extracts the file's contents and details, which the next node uses to pull out the text.
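Conceptually, joining pages means turning a list of per-page strings into one block of text. The Python sketch below shows the idea under that assumption; the helper name `join_pages` and the blank-line separator are hypothetical, and the node's actual behaviour may differ.

```python
from typing import Sequence


def join_pages(pages: Sequence[str], separator: str = "\n\n") -> str:
    """Concatenate per-page text into one string, skipping empty pages."""
    cleaned = [p.strip() for p in pages if p and p.strip()]
    return separator.join(cleaned)
```

With the pages joined, the downstream steps can treat the whole document as a single string instead of handling each page separately.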

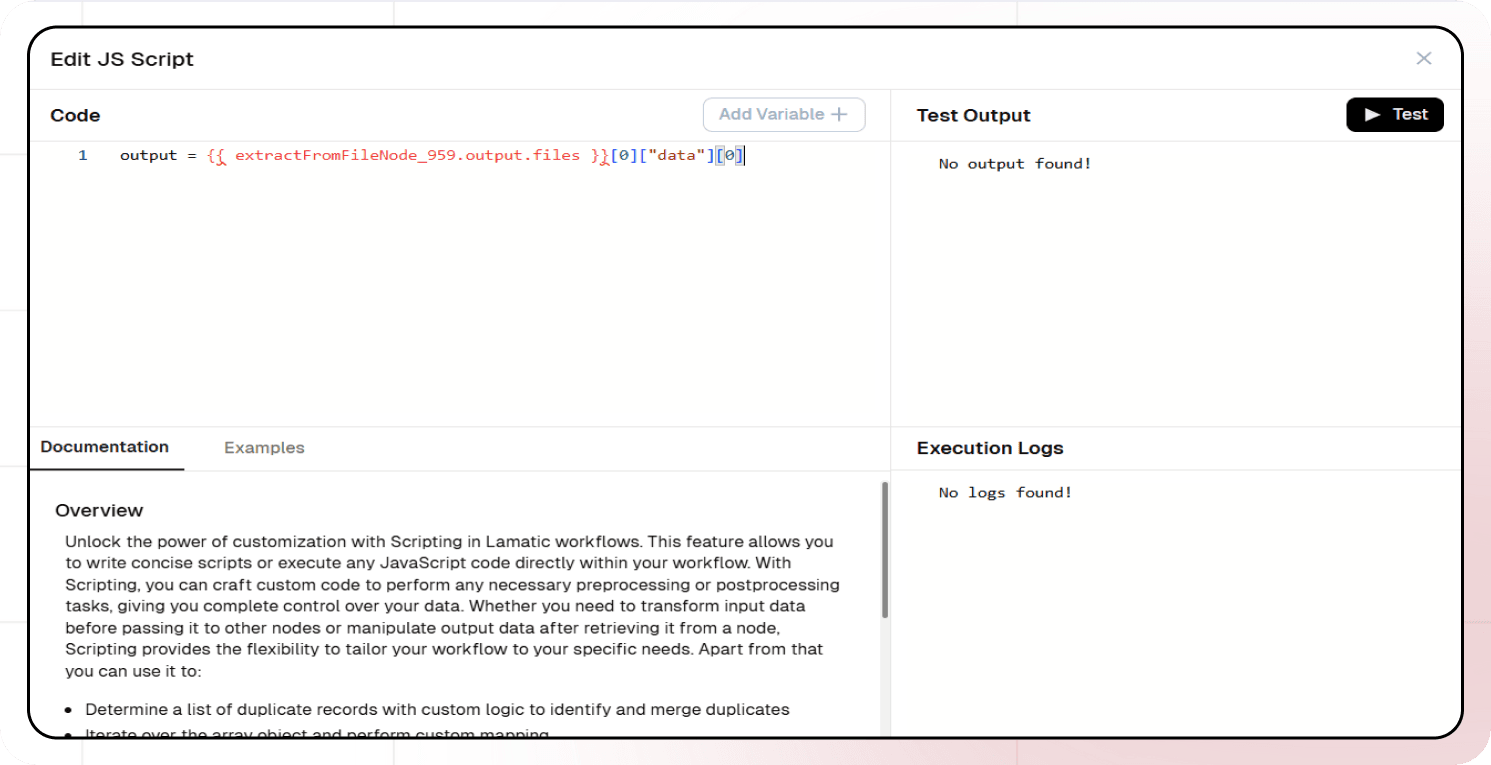

4. Extract Text Node

- Add the "Code" node to your flow.

- Write the following script to extract the text from the file content:

output = {{ extractFromFileNode_959.output.files }}[0]["data"][0]

- The extracted text will be passed to the Text LLM node for generating responses.
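The indexing in that script implies a nested structure: a list of files, each with a `data` list whose first element is the text. The standalone Python sketch below mirrors that lookup on a made-up sample so the shape is easier to see. The sample values and the `name` field are assumptions, not the node's documented output, and `first_text` is a hypothetical helper.

```python
# Hypothetical sample of what the Extract File node output might look like.
sample_files = [
    {
        "name": "report.pdf",  # assumed field, for illustration only
        "data": ["Full text of the document..."],
    }
]


def first_text(files):
    """Mirror files[0]["data"][0], but fail with a clear message."""
    if not files:
        raise ValueError("no files were extracted")
    data = files[0].get("data") or []
    if not data:
        raise ValueError("the first file has no extracted data")
    return data[0]


output = first_text(sample_files)
```

The explicit checks are optional, but they make an empty or failed extraction easier to diagnose than a bare index error.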

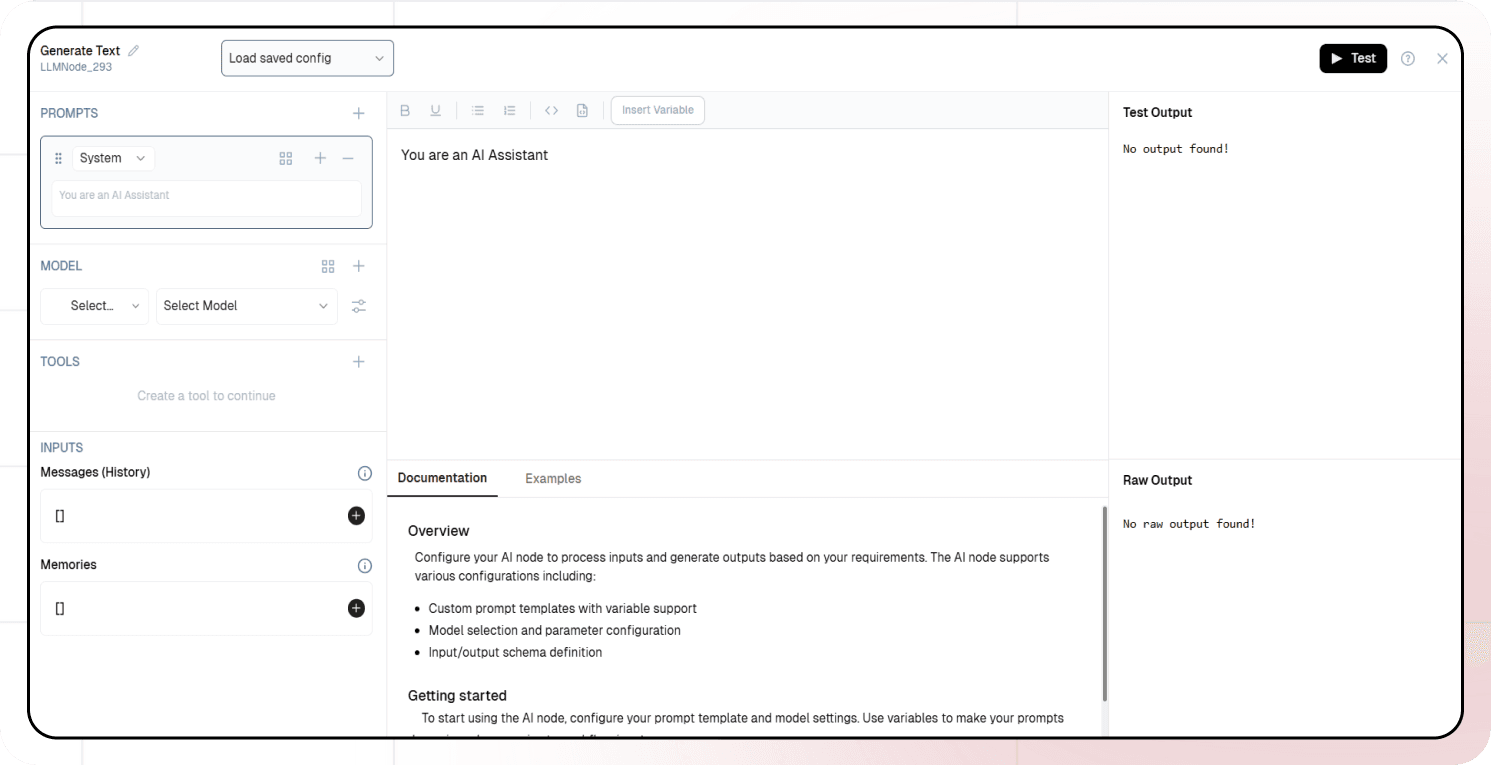

5. Text LLM Node

- Next, add the Text LLM node to generate responses based on the extracted text.

- Configure the Text LLM node to take the extracted text as context, and instruct it to answer the user's queries using only that text.

- Write your prompts so that the answers are generated from the extracted text.
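One way to picture the prompt setup is as a system message that carries the extracted text plus a user message that carries the question. The Python sketch below assembles such a pair. The function name `build_messages`, the wording of the instruction, and the `max_chars` truncation limit are all assumptions for illustration; in Lamatic you would enter the equivalent text in the node's prompt fields.

```python
def build_messages(document_text: str, question: str, max_chars: int = 12000):
    """Build a system/user message pair that grounds answers in the document."""
    # Truncate very long documents so the prompt stays within a rough budget.
    context = document_text[:max_chars]
    system = (
        "You answer questions using only the document below. "
        "If the answer is not in the document, say you don't know.\n\n"
        f"Document:\n{context}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]
```

Telling the model to admit when the document lacks an answer helps keep responses tied to the file rather than to the model's general knowledge.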

6. Chat Response Node

- Finally, configure the Chat Response node to send the generated responses to the chatbot widget.

7. Test and Deploy Flow

Test the flow thoroughly with your chatbot widget to confirm it gives accurate and helpful responses. Once you are satisfied, click the Deploy button to make it live; you will be asked to enter a deployment message.

You've successfully built your own intelligent chatbot widget to answer questions based on your media files!