AI Recipe Maker with Memory Store


Try out this flow yourself at Lamatic.ai. Sign up for free and start building your own AI workflows.

This guide walks you through building an AI-powered recipe generator that uses memory nodes. The system processes user input, remembers preferences, dietary restrictions, and past interactions, and uses them to generate personalized recipes. Whether you have allergies, particular tastes, or favorite ingredients, the AI tailors its suggestions into cooking instructions that fit your needs.

What You'll Build

A recipe API that accepts a user query, stores and recalls user preferences, and returns structured recipes. Each generated recipe includes the dish name, ingredients, and step-by-step cooking instructions, and takes the user's dietary restrictions and favorite ingredients into account. You can call this API from any application that needs personalized recipe recommendations.
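To make the output shape concrete, here is a minimal Python sketch of a recipe object and a parser for it. The field names dish_name, ingredients, and steps, and the parse_recipe helper, are assumptions: the flow itself does not fix a schema, so match them to whatever your prompt asks the model to return.

```python
import json
from dataclasses import dataclass


@dataclass
class Recipe:
    """One generated recipe (hypothetical field names, not defined by the flow)."""
    dish_name: str
    ingredients: list[str]
    steps: list[str]


def parse_recipe(raw: str) -> Recipe:
    """Parse a JSON string into a Recipe, failing loudly on missing fields."""
    data = json.loads(raw)
    missing = {"dish_name", "ingredients", "steps"} - set(data)
    if missing:
        raise ValueError(f"recipe is missing fields: {sorted(missing)}")
    return Recipe(
        dish_name=str(data["dish_name"]),
        ingredients=[str(item) for item in data["ingredients"]],
        steps=[str(step) for step in data["steps"]],
    )


example = parse_recipe(
    '{"dish_name": "Chickpea Curry",'
    ' "ingredients": ["chickpeas", "coconut milk", "curry paste"],'
    ' "steps": ["Simmer the curry paste.", "Add chickpeas and coconut milk."]}'
)
print(example.dish_name)  # → Chickpea Curry
```

Failing on missing fields, rather than filling defaults, makes a prompt that drifts away from the expected structure easy to spot.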

Getting Started

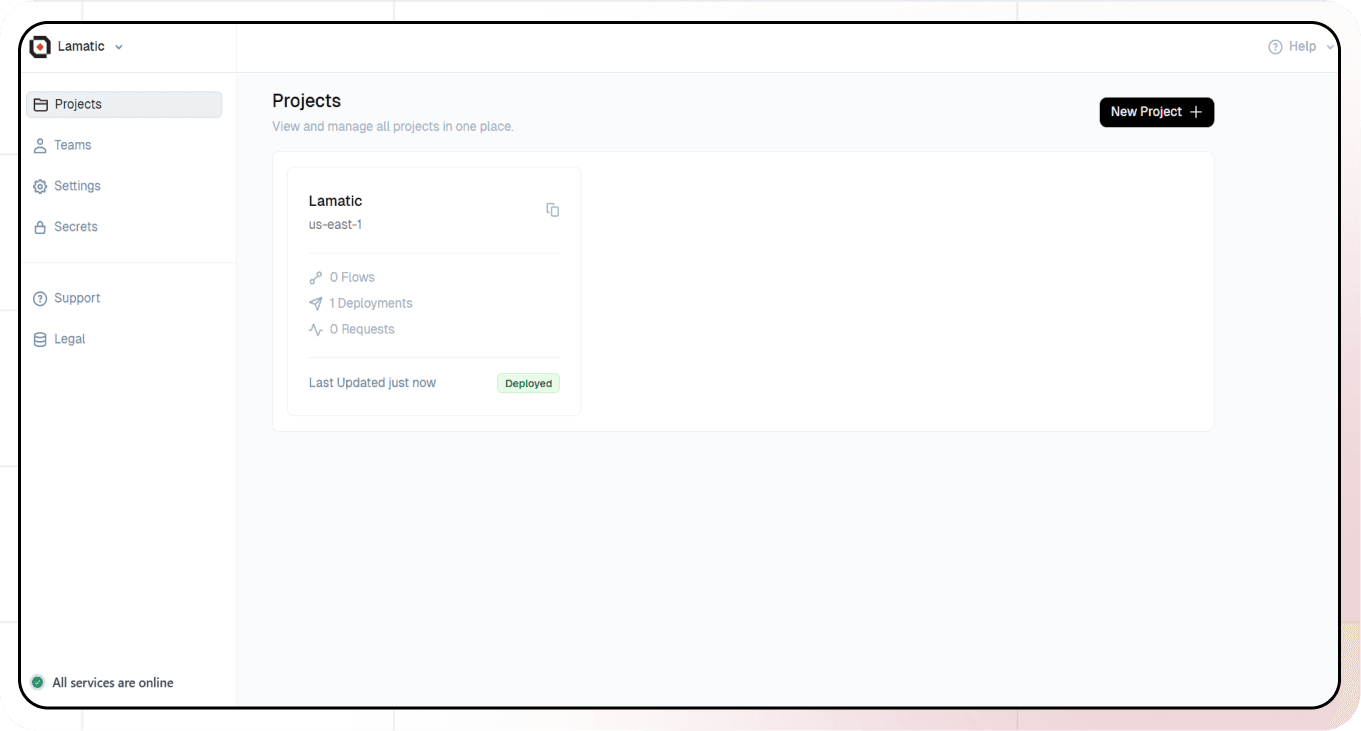

1. Project Setup

- Sign up at Lamatic.ai and log in.

- Navigate to Projects and click New Project, or select an existing project.

- You'll see sections such as Flows, Context, and Connections.

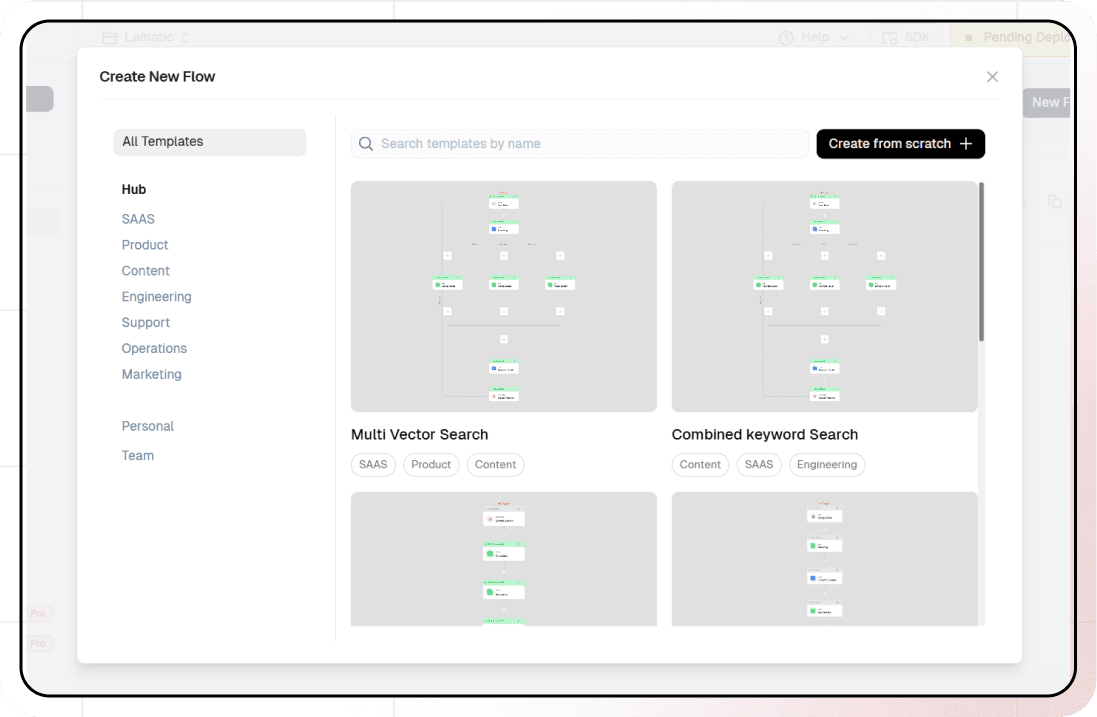

2. Creating a New Flow

- Navigate to Flows and select New Flow.

- Click Create from scratch as the starting point.

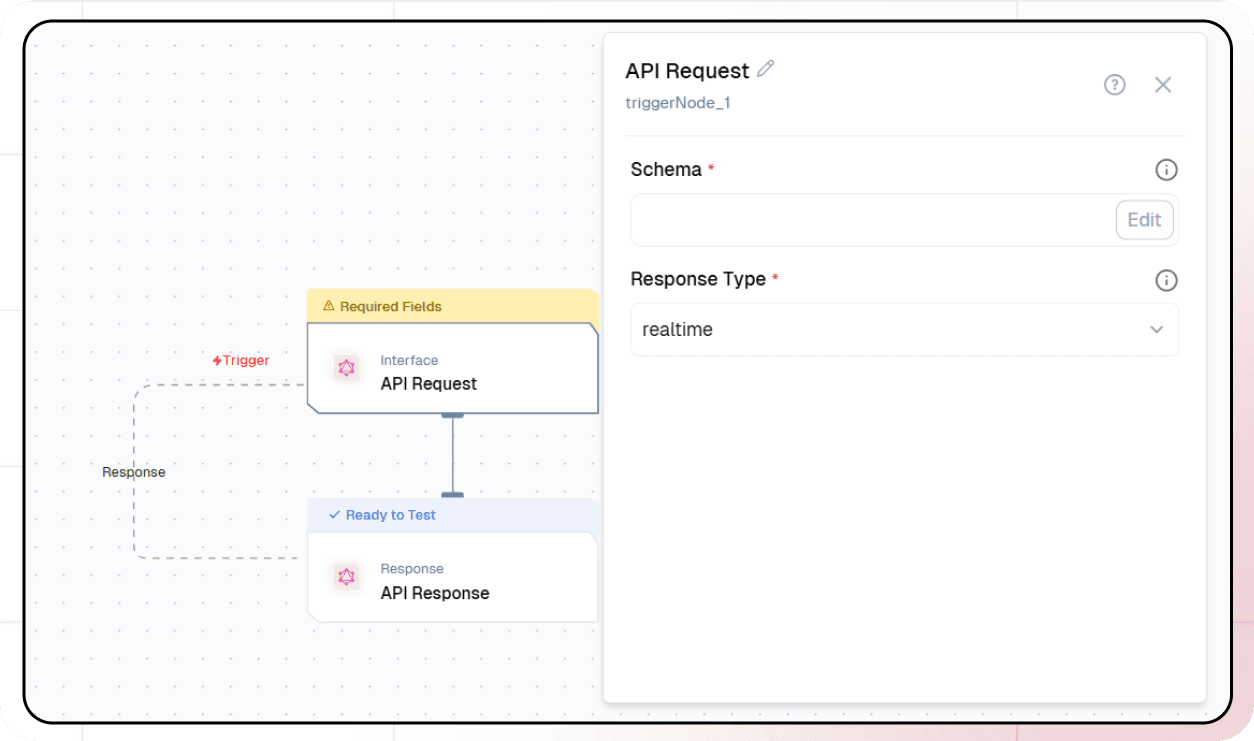

3. Setting Up Your API

- Click "Choose a Trigger"

- Select "API Request" under the interface options

- Configure your API:

  - Add your Input Schema

  - Add query as a parameter in the input schema

  - Set the response type to "Real-time"

- Click Save

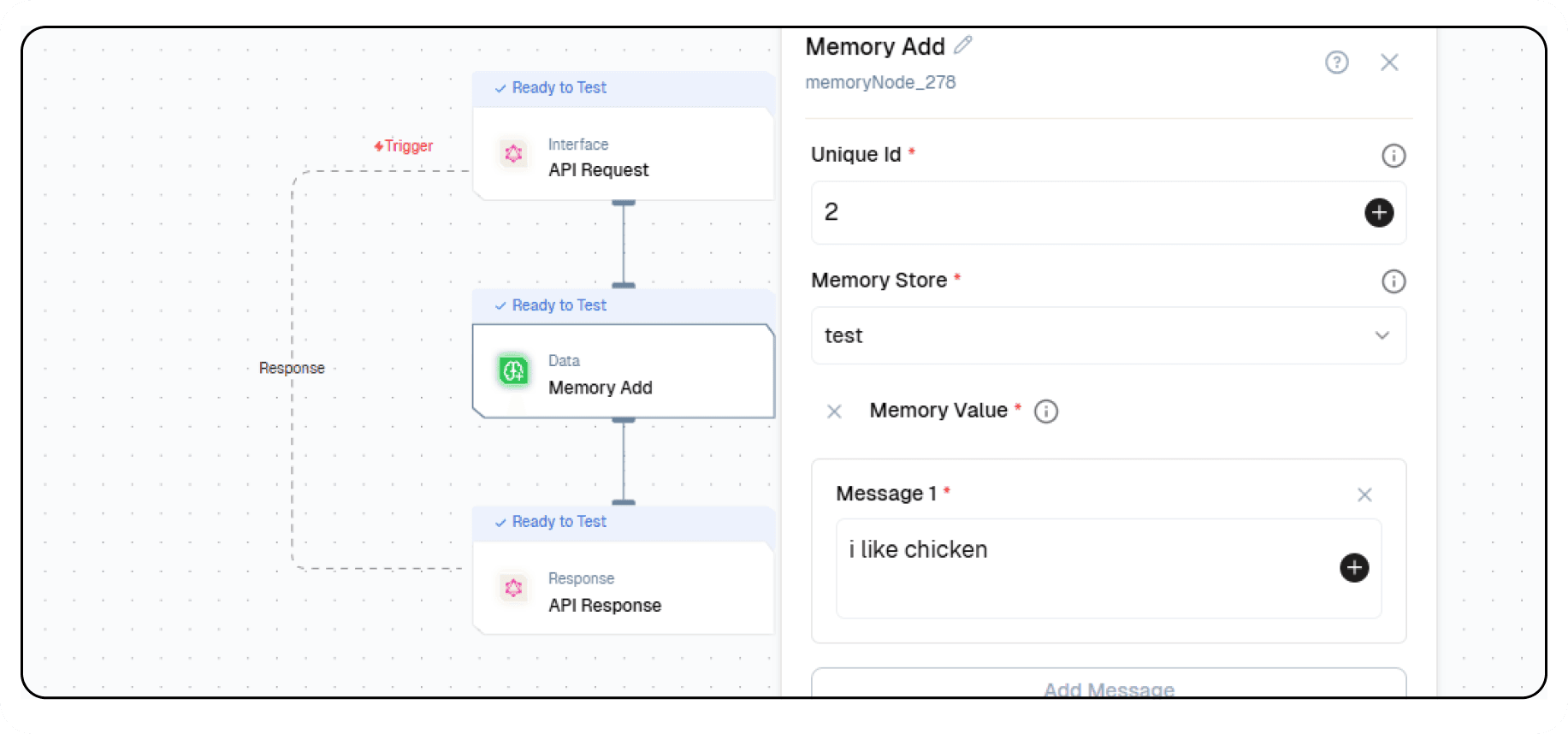
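The input schema declares which fields a caller must send. The sketch below mirrors a schema with a single string field, query, as a Python dict and checks an incoming payload against it. The dict format, TYPE_MAP, and validate_payload are illustrative assumptions, not Lamatic's schema syntax or validator.

```python
# Hypothetical mirror of the trigger's input schema: one string field, "query".
INPUT_SCHEMA = {"query": "string"}

# Map schema type names to Python types (only the types used here).
TYPE_MAP = {"string": str}


def validate_payload(payload: dict, schema: dict = INPUT_SCHEMA) -> list[str]:
    """Return a list of problems; an empty list means the payload matches."""
    problems = []
    for field, type_name in schema.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], TYPE_MAP[type_name]):
            problems.append(f"{field} should be a {type_name}")
    return problems


print(validate_payload({"query": "A vegan dinner with chickpeas, no nuts"}))  # → []
print(validate_payload({}))  # → ['missing field: query']
```

Returning a list of problems instead of raising on the first one lets a caller report every mismatch at once.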

4. Adding Memory Nodes

Memory Add Node

- Click the + icon to add a new node.

- Choose the Memory Add node.

- Enter a Unique ID and a Memory Store.

- Enter the messages in the Memory Value field.

- Configure the Embedding model:

  - Select your "Open AI" credentials

  - Choose "text-embedding-3-small" as your Model

- Configure the Generative model:

  - Select your "Open AI" credentials

  - Choose "gpt-4o-mini" as your Model

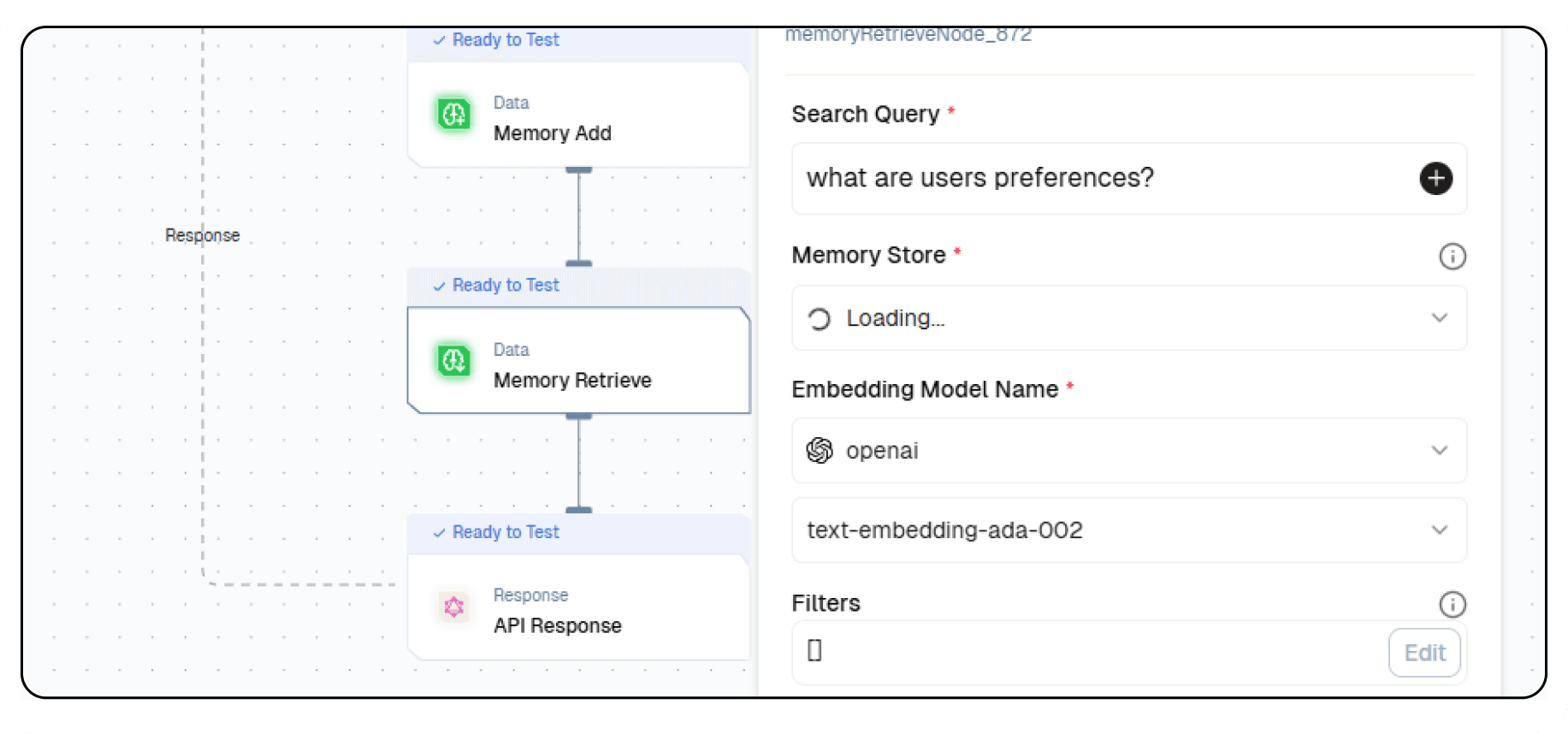
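Conceptually, the Memory Add node embeds each message and files it under the Unique ID in the chosen Memory Store. The sketch below is a hypothetical, in-process stand-in for that idea: a word-count vector replaces the text-embedding-3-small model, and MemoryStore is an illustrative class, not Lamatic's storage API. It also leaves out whatever the node's Generative model does.

```python
import re
from collections import Counter, defaultdict


def toy_embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


class MemoryStore:
    """Hypothetical in-process store: per-user lists of (message, vector) pairs."""

    def __init__(self) -> None:
        self._memories: dict[str, list[tuple[str, Counter]]] = defaultdict(list)

    def add(self, unique_id: str, message: str) -> None:
        # Embed once at write time so retrieval only needs to embed the query.
        self._memories[unique_id].append((message, toy_embed(message)))

    def messages(self, unique_id: str) -> list[str]:
        # .get avoids creating an empty entry for unknown IDs.
        return [text for text, _ in self._memories.get(unique_id, [])]


store = MemoryStore()
store.add("user-42", "I am allergic to peanuts.")
store.add("user-42", "My favorite ingredient is fresh basil.")
print(store.messages("user-42"))
```

Keying memories by the Unique ID keeps one user's preferences from leaking into another user's recipes.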

Memory Retrieve Node

- Click the + icon to add a new node.

- Choose the Memory Retrieve node.

- Enter a search query:

  What are the user's preferences?

- Select the Memory Store you chose in the Memory Add node.

- Configure the Embedding model:

  - Select your "Open AI" credentials

  - Choose "text-embedding-3-small" as your Model

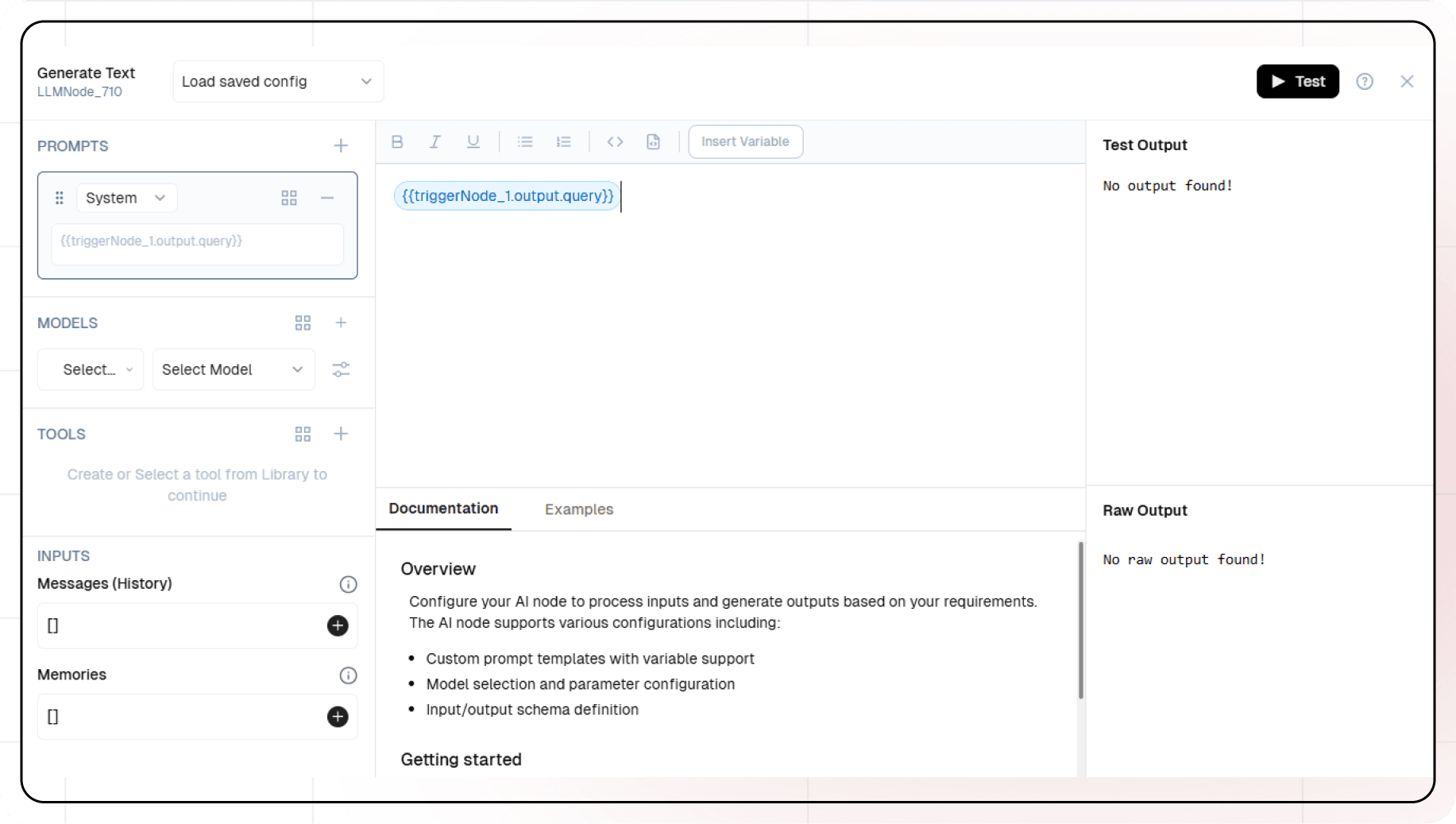
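Retrieval works the other way round: embed the search query and return the stored memories whose vectors are closest to it. The sketch ranks memories by cosine similarity, with a word-count vector standing in for text-embedding-3-small; retrieve, cosine, and toy_embed are hypothetical helpers, not Lamatic functions. A bag-of-words vector only matches shared words, so the demo query reuses the memory's wording. A real embedding model is meant to match by meaning, so that a query such as "What are the user's preferences?" can still find "The user prefers spicy food."

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, memories: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k memories most similar to the query."""
    q = toy_embed(query)
    ranked = sorted(memories, key=lambda m: cosine(q, toy_embed(m)), reverse=True)
    return ranked[:top_k]


memories = [
    "The user prefers spicy food.",
    "The user is allergic to peanuts.",
    "The weather was rainy on Tuesday.",
]
print(retrieve("What spicy food does the user like?", memories, top_k=1))
# → ['The user prefers spicy food.']
```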

5. Adding an AI LLM

- Click the + icon to add a new node

- Choose "Generate Text"

- Configure the AI model:

  - Select your "Open AI" credentials

  - Choose "gpt-4o-mini" as your Model

- Click "+" under the Prompts section.

- Set up your prompt:

  {{triggerNode_1.output.query}}

- You can add variables using the "Insert Variable" button

- Add the output of the Memory Retrieve node to the Memories field
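The {{triggerNode_1.output.query}} placeholder refers to the query field of the trigger node's output. The sketch below resolves double-brace placeholders by walking a dotted path through nested dictionaries, to show what such a variable reference points at. It is an assumption about the general mechanism, not Lamatic's template engine; memoryNode_1 and its memories field are hypothetical names, and putting memories into the prompt text is only for illustration, since in this flow they go into the Memories field.

```python
import re

# Matches {{ some.dotted.path }} placeholders.
PLACEHOLDER = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def render(template: str, context: dict) -> str:
    """Replace each {{a.b.c}} placeholder by walking nested dicts in context."""

    def lookup(match: re.Match) -> str:
        value = context
        for key in match.group(1).split("."):
            value = value[key]  # a KeyError signals a typo in the variable path
        return str(value)

    return PLACEHOLDER.sub(lookup, template)


# Hypothetical node outputs; memoryNode_1 and "memories" are illustrative names.
context = {
    "triggerNode_1": {"output": {"query": "Suggest a quick dinner"}},
    "memoryNode_1": {"output": {"memories": "Allergic to peanuts; loves basil"}},
}
system_prompt = (
    "You are a recipe assistant. Respect these user memories: "
    "{{memoryNode_1.output.memories}}. Return a dish name, ingredients, and steps."
)
print(render(system_prompt, context))
print(render("{{triggerNode_1.output.query}}", context))  # → Suggest a quick dinner
```

Failing with a KeyError on an unknown path, instead of silently inserting an empty string, surfaces a mistyped variable right away.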

6. Configuring the Response

- Click the API Response node

- Add Output Variables by clicking the + icon

- Select the output variable from your Generate Text node

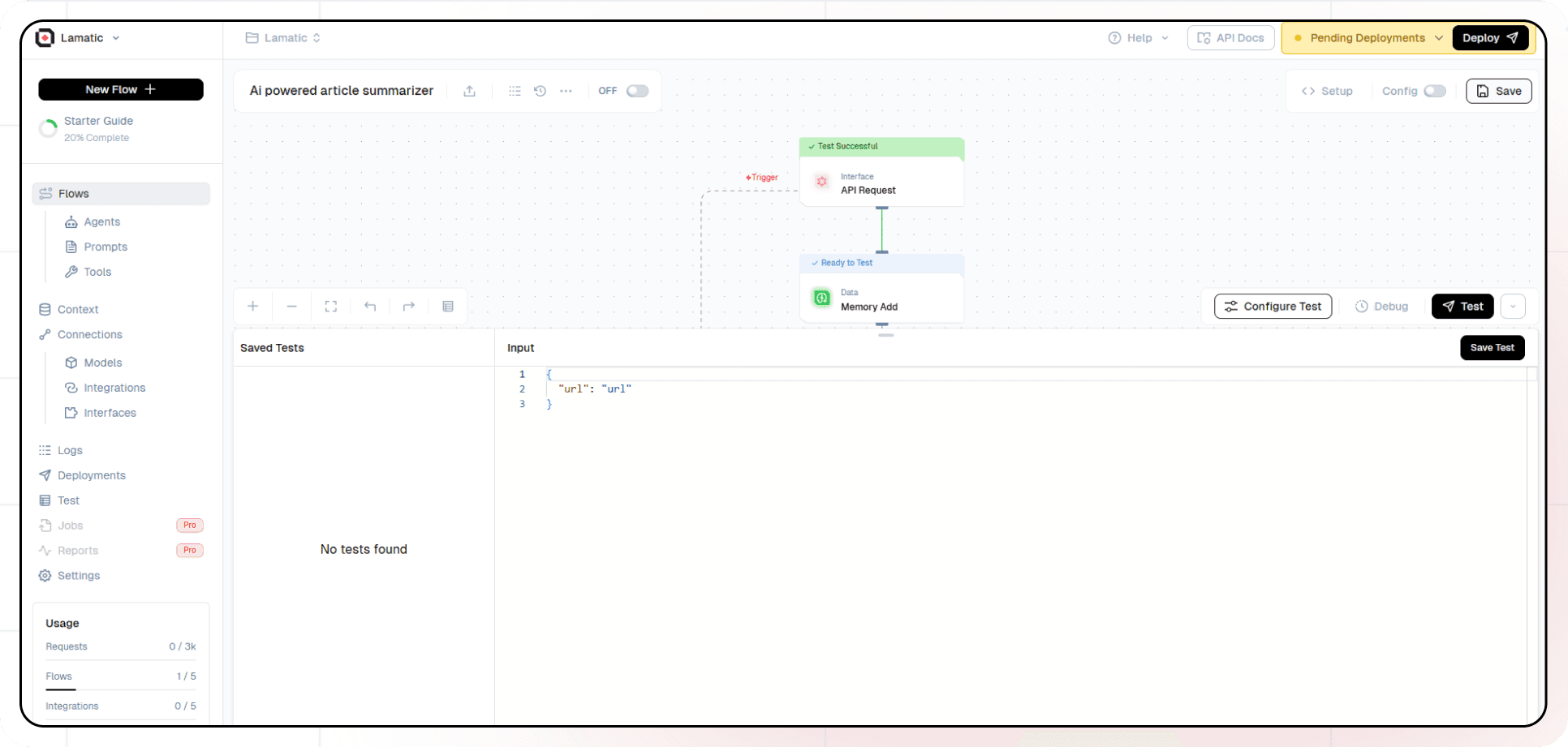
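The API Response node returns whatever variable you map from the Generate Text node. If your prompt asks the model for JSON, the model may still wrap it in a Markdown code fence, so a consumer of the response may want to normalize it. The hypothetical build_api_response helper below parses fenced or bare JSON and otherwise passes the raw text through; the recipe key is an assumed name, not one the flow defines.

```python
import json

FENCE = "`" * 3  # built this way so the snippet itself contains no literal fence


def build_api_response(generated_text: str) -> dict:
    """Wrap model output in a response body.

    If the text is JSON (optionally inside a Markdown code fence), return it as a
    parsed object; otherwise pass the raw text through unchanged.
    """
    text = generated_text.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line (which may carry a language tag) and the closing fence.
        text = text.split("\n", 1)[1] if "\n" in text else ""
        text = text.rsplit(FENCE, 1)[0].strip()
    try:
        return {"recipe": json.loads(text)}
    except json.JSONDecodeError:
        return {"recipe": generated_text}


raw = FENCE + "json\n" + '{"dish_name": "Pesto Pasta"}' + "\n" + FENCE
print(build_api_response(raw))  # → {'recipe': {'dish_name': 'Pesto Pasta'}}
print(build_api_response("Sorry, no recipe today."))  # → {'recipe': 'Sorry, no recipe today.'}
```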

7. Testing the Flow

- Click the "API Request" trigger node

- Click Configure Test

- Enter a sample value for query and click Test

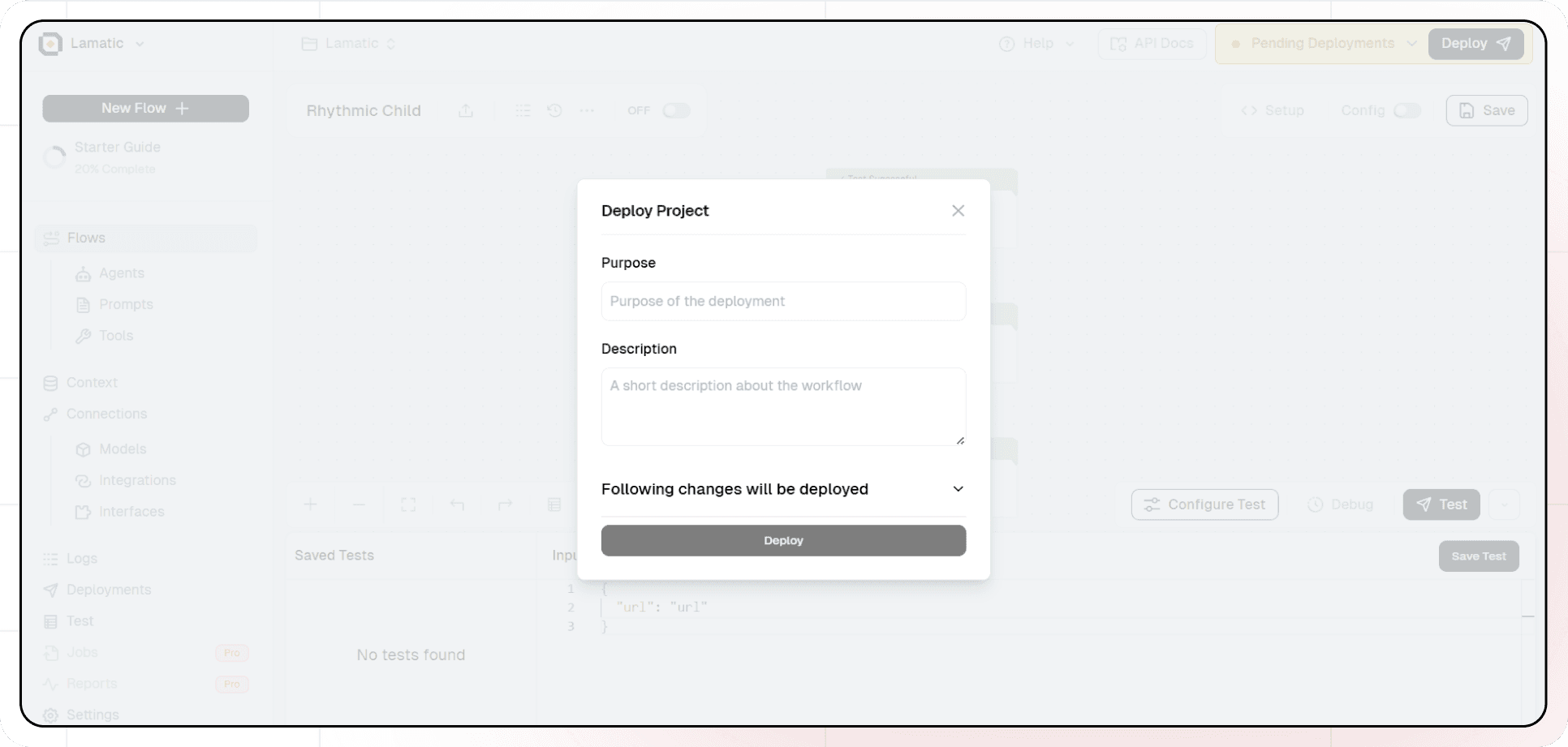

8. Deployment

- Click the Deploy button

- Your API is now ready to be integrated into Node.js or Python applications

- Your flow will run on Lamatic's global edge network for fast, scalable performance
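A deployed flow is called over HTTP. The sketch below uses only Python's standard library to build a POST request carrying the query input and to send it. The endpoint, the bearer-token header, and the {"query": ...} body are placeholders and assumptions; copy the exact URL, headers, and request body shape from your project's deploy screen, since Lamatic's real request format may differ.

```python
import json
import urllib.request

# Placeholders: replace with the real endpoint and key from your Lamatic project.
ENDPOINT = "https://example.invalid/your-lamatic-endpoint"  # hypothetical
API_KEY = "YOUR_API_KEY"  # hypothetical; keep real keys out of source control


def build_request(query: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying the flow's query input."""
    body = json.dumps({"query": query}).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",  # assumed auth scheme
        },
        method="POST",
    )


def call_flow(query: str, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(build_request(query), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


req = build_request("A gluten-free breakfast with eggs")
print(req.get_method(), req.full_url)  # → POST https://example.invalid/your-lamatic-endpoint
```

Separating build_request from call_flow lets you inspect or unit-test the request without hitting the network.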

9. What's Next?

- Experiment with different prompts

- Try other AI models

- Add more processing steps to your flow

- Integrate the API into your applications

10. Tips

- Save your tests for reuse across different scenarios

- Use consistent JSON structures for better maintainability

- Test thoroughly before deployment
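One way to act on these tips outside the Lamatic editor is to keep test inputs in a small JSON file with the same keys in every case and to check outputs against simple rules. The case format, load_cases, and check_output below are a hypothetical local harness, not a Lamatic feature.

```python
import json

# Hypothetical saved test cases: every case uses the same keys.
TEST_CASES_JSON = """
[
  {"name": "peanut allergy", "input": {"query": "Dessert ideas? I'm allergic to peanuts."},
   "must_not_contain": ["peanut butter"]},
  {"name": "favorite ingredient", "input": {"query": "Something with basil"},
   "must_not_contain": []}
]
"""

REQUIRED_KEYS = {"name", "input", "must_not_contain"}


def load_cases(raw: str) -> list[dict]:
    """Parse saved cases and reject any that break the shared structure."""
    cases = json.loads(raw)
    for case in cases:
        missing = REQUIRED_KEYS - set(case)
        if missing:
            raise ValueError(f"{case.get('name', '?')}: missing {sorted(missing)}")
        if "query" not in case["input"]:
            raise ValueError(f"{case['name']}: input has no 'query'")
    return cases


def check_output(case: dict, output: str) -> bool:
    """A simple check: the recipe text must avoid every forbidden phrase."""
    lowered = output.lower()
    return not any(phrase.lower() in lowered for phrase in case["must_not_contain"])


cases = load_cases(TEST_CASES_JSON)
print(check_output(cases[0], "Banana oat cookies with sunflower seed butter"))  # → True
```

A phrase check like this is deliberately crude; it catches obvious violations such as a forbidden allergen but cannot judge recipe quality.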

Now you have a working AI-powered API! You can expand on this foundation to build more complex applications using Lamatic.ai's features.